Robotics and Perception Group

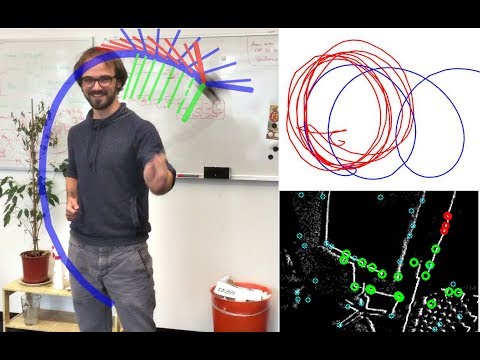

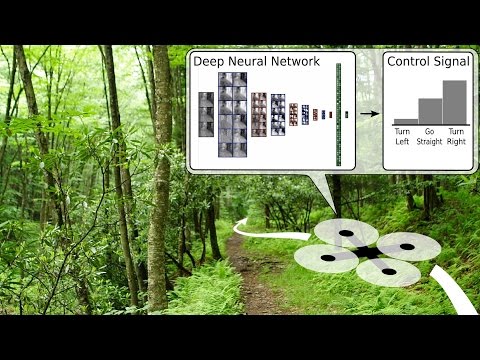

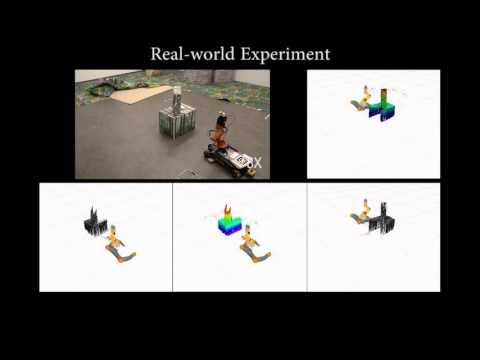

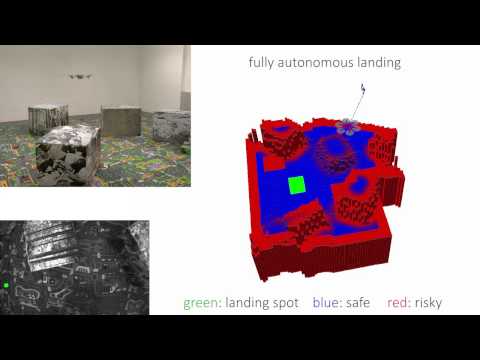

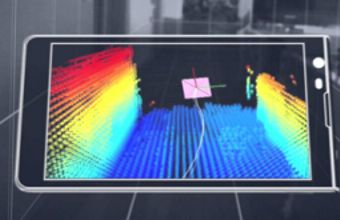

Welcome to the website of the Robotics and Perception Group, led by Prof. Dr. Davide Scaramuzza. Our lab was founded in February 2012 and is part of the Department of Informatics at the University of Zurich. Our mission is to develop autonomous machines that can navigate by themselves using only onboard cameras, without relying on external infrastructure such as GPS or motion capture systems. Our interests encompass both ground robots and micro flying robots, as well as heterogeneous multi-robot systems that combine the two. We want our machines to be active rather than passive: they should react to and navigate within their environment so as to gain the best knowledge from it.

Follow us on Google+, Scholar, GitHub, and YouTube.

News

-

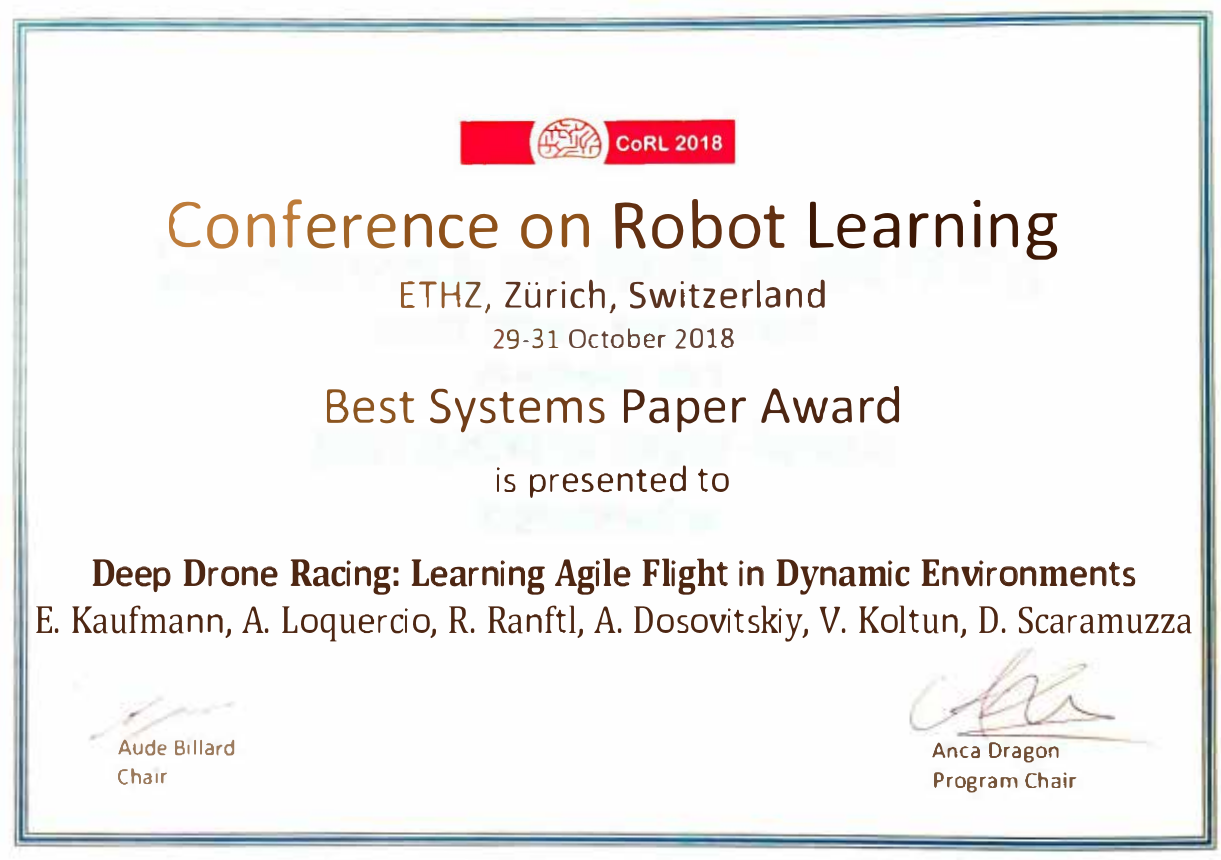

CoRL 2018 Best Systems Paper Award

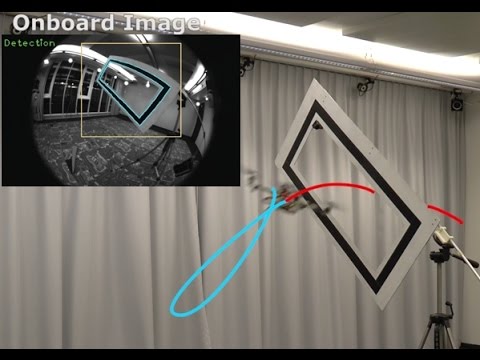

Our paper Deep Drone Racing: Learning Agile Flight in Dynamic Environments won the Best Systems Paper Award at the Conference on Robot Learning (CoRL) 2018.

-

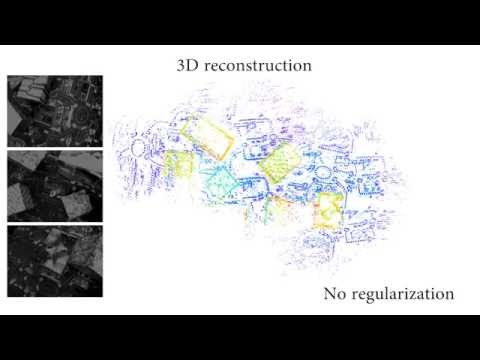

Code Release - EMVS: Event-based Multi-View Stereo

-

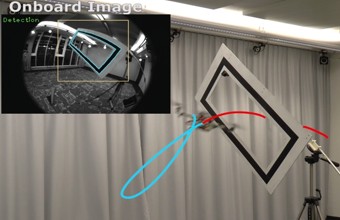

RPG won the IROS 2018 Autonomous Drone Race

We are proud to announce that our team won the IROS Autonomous Drone Race Competition, passing all 8 gates in just 30 seconds! To succeed, we combined deep networks, local VIO, Kalman filtering, and optimal control. Watch our performance here.

-

Oculus Quest is out

Mark Zuckerberg just announced the new Oculus VR headset, called Oculus Quest. This is what our former lab startup, Zurich Eye (now Oculus Zurich), has been working on for the past two years. Watch the video.

-

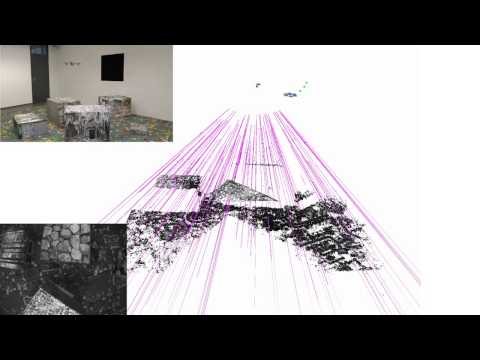

RPG live demo at the Lange Nacht der Zürcher Museen

We performed a live quadrotor demo at the Zurich Kunsthalle during the Lange Nacht der Zürcher Museen, as part of the 100 Ways of Thinking show, in front of more than 200 people. Check out the media coverage here.

-

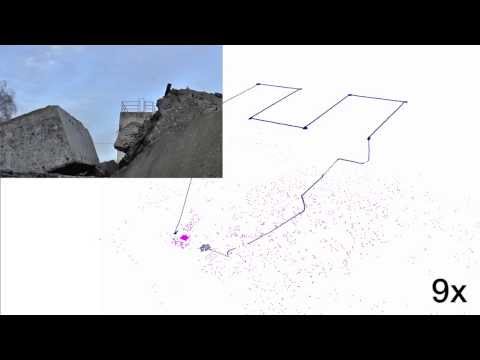

Huge media coverage for search and rescue demonstration

Our lab received extensive Swiss media attention (NZZ, SwissInfo, SRF) for our live flight demonstration of a quadrotor entering a collapsed building to simulate a search and rescue operation. Check out the video here.

-

RPG research featured in New Scientist

Our research on autonomous drone racing was featured in New Scientist. Check out the article here.

-

Paper accepted in RA-L 2018

Our paper on safe quadrotor navigation by computing forward reachable sets was accepted for publication in the Robotics and Automation Letters (RA-L) 2018. Check out the PDF.

-

Paper accepted at RSS 2018

Our paper about drone racing was accepted to RSS 2018 in Pittsburgh! Check out the long version, short version and the video!

-

Paper accepted in IEEE TRO!

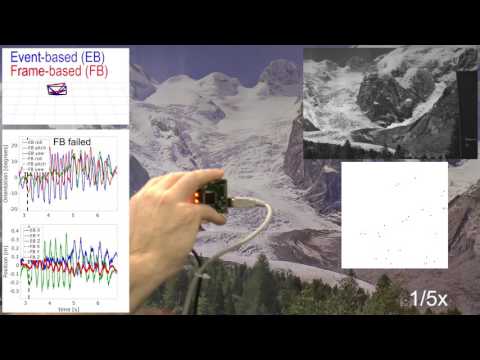

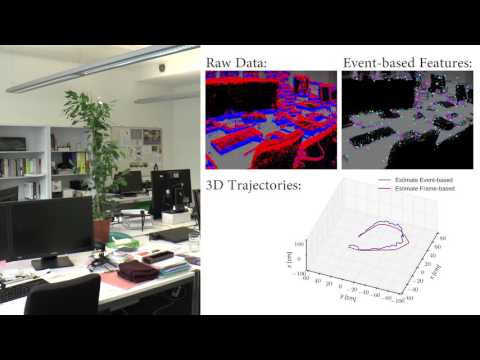

Our paper on Continuous-Time Visual-Inertial Odometry for Event Cameras has been accepted for publication in IEEE Transactions on Robotics. Check out the paper.

-

RPG receives 2017 IEEE Transactions on Robotics (TRO) best paper award

Our paper on IMU pre-integration received the 2017 IEEE Transactions on Robotics (TRO) best paper award at ICRA 2018 in Brisbane, Australia. Check out the paper here!

-

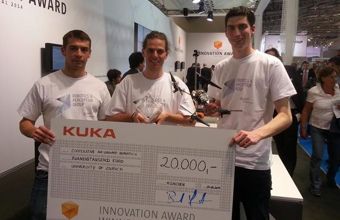

IEEE TRO Best Paper Award

We are proud to announce that our paper on IMU pre-integration will receive the 2017 IEEE Transactions on Robotics (TRO) best paper award. On this occasion, IEEE made the article open access for the next ten years!

C. Forster, L. Carlone, F. Dellaert, D. Scaramuzza

On-Manifold Preintegration for Real-Time Visual-Inertial Odometry

IEEE Transactions on Robotics, vol. 33, no. 1, pp. 1-21, Feb. 2017.

PDF DOI YouTube

-

Qualcomm Innovation Fellowship

Henri Rebecq, a PhD student in our lab, won a Qualcomm Innovation Fellowship with his proposal "Learning Representations for Low-Latency Perception with Frame and Event-based Cameras"!

-

Release of NetVLAD in Python/TensorFlow

We are happy to announce a Python/TensorFlow port of the full NetVLAD network, approved by the original authors and available here (see also our software/datasets page). The repository contains code that allows plug-and-play Python deployment of the best off-the-shelf model made available by the authors. We have thoroughly tested that the ported model produces output similar to that of the original MATLAB implementation, and that it achieves excellent place recognition performance on KITTI 00.

-

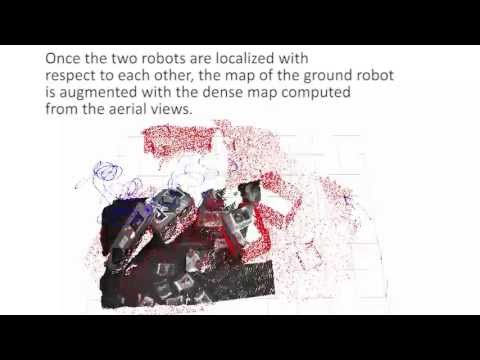

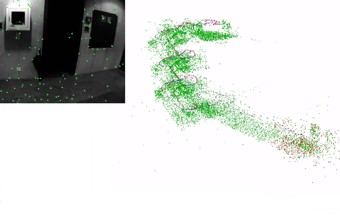

Release of Data-Efficient Decentralized Visual SLAM

We provide the code accompanying our recent Decentralized Visual SLAM paper. The code includes a C++/MATLAB simulation with all the building blocks for a state-of-the-art decentralized visual SLAM system. Check out the paper, the video pitch, the presentation, and the code.

-

Release of the RPG Quadrotor Control Framework

We provide a complete framework for flying quadrotors based on control algorithms developed by the Robotics and Perception Group. We also provide an interface to the RotorS Gazebo plugins to use our algorithms in simulation. Check out our software page for more details.

-

Release of the Fast Event-based Corner Detector

We provide the code of our FAST event-based corner detector. Our implementation is capable of processing millions of events per second on a single core (less than a microsecond per event) and reduces the event rate by a factor of 10 to 20. Check out our paper, video, and code.

-

Davide Scaramuzza gives an invited seminar at Princeton University