Sensor Calibration

We work on the intrinsic calibration of different sensors, such as omnidirectional and event-based cameras. We also work on calibration between an omnidirectional camera and a 3D laser range finder.

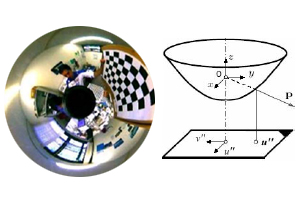

Omnidirectional Camera Modelling and Calibration

We propose a unified model for central omnidirectional cameras (using either a mirror or a fisheye lens) and a novel calibration method that uses checkerboard patterns. Both are implemented in the Omnidirectional Camera Calibration Toolbox for MATLAB.
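As a rough illustration of what such a camera model computes, the sketch below back-projects a pixel to a unit viewing ray. It assumes a polynomial form, where a pixel at distance rho from the distortion centre maps to the ray (x, y, f(rho)) with f(rho) = a0 + a1*rho + a2*rho^2 + ...; this form is an assumption for illustration and is not spelled out in the text above. The function name `pixel_to_ray`, the coefficient values, and the centre are hypothetical, and any affine correction for sensor misalignment is left out.

```python
import math


def pixel_to_ray(u, v, coeffs, center=(0.0, 0.0)):
    """Back-project pixel (u, v) to a unit-length viewing ray.

    coeffs: hypothetical polynomial coefficients [a0, a1, a2, ...] of
            f(rho) = a0 + a1*rho + a2*rho**2 + ...
    center: assumed distortion centre in pixel coordinates.
    """
    # Pixel coordinates relative to the distortion centre.
    x = u - center[0]
    y = v - center[1]
    rho = math.hypot(x, y)

    # Evaluate the polynomial with Horner's scheme.
    z = 0.0
    for a in reversed(coeffs):
        z = z * rho + a

    # Normalise so the ray has unit length.
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)


# Made-up coefficients and centre, for illustration only.
example_coeffs = [-100.0, 0.0, 0.002]
example_center = (320.0, 240.0)
ray = pixel_to_ray(400.0, 300.0, example_coeffs, example_center)
```

In a real calibration the coefficients and centre would come from the calibration result, for example one estimated from checkerboard images, rather than being chosen by hand.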


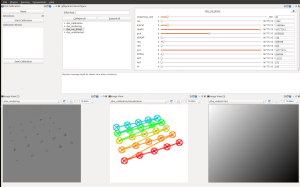

ROS Driver and Calibration Tool for the Dynamic Vision Sensor (DVS)

The RPG DVS ROS Package allows the Dynamic Vision Sensor (DVS) to be used within the Robot Operating System (ROS). It also contains a calibration tool for intrinsic and stereo calibration using a blinking pattern.

The code with instructions on how to use it is hosted on GitHub.
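To give an intuition for why a blinking pattern suits an event-based sensor, the sketch below locates pattern points from a stream of events: blinking points generate many events at the same pixels, so counting events per pixel and grouping the busy pixels yields candidate point centres. This is an illustrative assumption, not the algorithm used in the RPG DVS ROS Package. The event layout `(x, y, t, polarity)`, the function name, and the threshold are hypothetical.

```python
from collections import Counter


def blinking_point_centroids(events, min_events=10):
    """Return sorted (cx, cy) centroids of pixel blobs with many events.

    events: iterable of (x, y, t, polarity) tuples (assumed layout).
    min_events: hypothetical per-pixel threshold separating blinking
                points from sparse noise events.
    """
    # Count how many events each pixel produced.
    counts = Counter((x, y) for x, y, _t, _p in events)
    active = {p for p, n in counts.items() if n >= min_events}

    centroids = []
    while active:
        # Grow one 8-connected blob starting from an arbitrary active pixel.
        stack = [active.pop()]
        blob = []
        while stack:
            px, py = stack.pop()
            blob.append((px, py))
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    q = (px + dx, py + dy)
                    if q in active:
                        active.remove(q)
                        stack.append(q)
        cx = sum(p[0] for p in blob) / len(blob)
        cy = sum(p[1] for p in blob) / len(blob)
        centroids.append((cx, cy))
    return sorted(centroids)
```

The resulting centroids could then play the role that checkerboard corners play in a conventional camera calibration, again under the assumptions stated above.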

Authors: Elias Mueggler, Basil Huber, Luca Longinotti, Tobi Delbruck.

References

- E. Mueggler, B. Huber, D. Scaramuzza. Event-based, 6-DOF Pose Tracking for High-Speed Maneuvers, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, 2014. [PDF, 926 KB]

- A. Censi, J. Strubel, C. Brandli, T. Delbruck, D. Scaramuzza. Low-latency localization by Active LED Markers tracking using a Dynamic Vision Sensor, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, 2013. [PDF, 1 MB]

- P. Lichtsteiner, C. Posch, T. Delbruck. A 128x128 120dB 15us Latency Asynchronous Temporal Contrast Vision Sensor, IEEE Journal of Solid-State Circuits, Feb. 2008, 43(2), 566-576. [PDF]

Self Calibration between a Camera and a 3D Scanner from Natural Scenes

References

- D. Scaramuzza, A. Harati, R. Siegwart. Extrinsic Self Calibration of a Camera and a 3D Laser Range Finder from Natural Scenes. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2007), San Diego, USA, October 2007. [PDF, 510 KB]

- D. Scaramuzza, R. Siegwart, and A. Martinelli. A Robust Descriptor for Tracking Vertical Lines in Omnidirectional Images and its Use in Mobile Robotics. International Journal of Robotics Research, Volume 28, Issue 2, February 2009. [PDF, 2 MB]