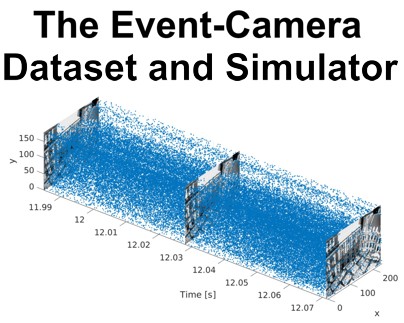

The Event-Camera Dataset and Simulator

Event-based Data for Pose Estimation, Visual Odometry, and SLAM

This page presents the world's first collection of datasets recorded with an event-based camera for high-speed robotics. The data also include intensity images, inertial measurements, and ground truth from a motion-capture system. An event-based camera is a revolutionary vision sensor with three key advantages: a measurement rate almost one million times higher than that of standard cameras, a latency of 1 microsecond, and a high dynamic range of 130 decibels (standard cameras only have 60 dB). These properties enable the design of a new class of algorithms for high-speed robotics, where standard cameras suffer from motion blur and high latency. All the data are released both as text files and as binary (i.e., rosbag) files.

Links:

More on event-based vision research at our lab

Tutorial on event-based vision

Citing

When using the data in an academic context, please cite the following paper.

The Event-Camera Dataset and Simulator: Event-based Data for Pose Estimation, Visual Odometry, and SLAM. International Journal of Robotics Research, Vol. 36, Issue 2, pages 142-149, Feb. 2017.

Dataset Format

We provide all datasets in two formats: text files and binary files (rosbag). While their content is identical, each format is better suited to particular applications. For prototyping, inspection, and testing, we recommend using the text files, since they can easily be loaded in Python or Matlab. The binary rosbag files are intended for users familiar with the Robot Operating System (ROS) and for applications that will run on a real system. The rosbag format is the one used by the RPG DVS ROS driver. Details of both formats follow below.

Text Files

The format of the text files is as follows.

- events.txt: One event per line (timestamp x y polarity)

- images.txt: One image reference per line (timestamp filename)

- images/00000001.png: Images referenced from images.txt

- imu.txt: One measurement per line (timestamp ax ay az gx gy gz)

- groundtruth.txt: One ground truth measurement per line (timestamp px py pz qx qy qz qw)

- calib.txt: Camera parameters (fx fy cx cy k1 k2 p1 p2 k3)
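Since the text files are meant to be loaded easily in Python, here is a minimal standard-library sketch for reading events.txt. It assumes whitespace-separated fields in the order listed above, timestamps in seconds, and polarity encoded as 0/1; the page only specifies the field order, so treat the units and encoding as assumptions. The names `Event` and `parse_events` are hypothetical.

```python
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp (assumed to be in seconds)
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # assumed: 1 = brightness increase, 0 = decrease


def parse_events(lines: Iterable[str]) -> List[Event]:
    """Parse lines of the form 'timestamp x y polarity' into Event tuples."""
    events = []
    for line in lines:
        fields = line.split()
        if len(fields) != 4:
            continue  # skip blank or malformed lines
        t, x, y, p = fields
        events.append(Event(float(t), int(x), int(y), int(p)))
    return events
```

For example, `with open("events.txt") as f: events = parse_events(f)`. For very large recordings, a vectorized loader such as `numpy.loadtxt` is likely to be much faster than this pure-Python loop.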

Binary Files (rosbag)

The rosbag files contain the events using dvs_msgs/EventArray message types. The images, camera calibration, and IMU measurements use the standard sensor_msgs/Image, sensor_msgs/CameraInfo, and sensor_msgs/Imu message types, respectively. Ground truth is provided as geometry_msgs/PoseStamped message type.

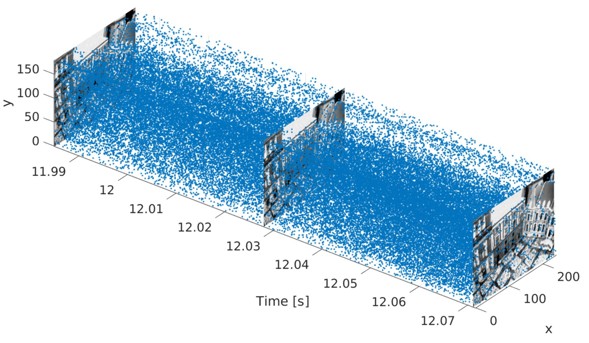

Plots

We provide various plots for each dataset for a quick inspection. The plots are available inside a ZIP file and contain, if available, the following quantities:

- the event rate (in events/s). Since the sensor is asynchronous, it has no well-defined event rate; we nonetheless provide a measure of this quantity by counting events in intervals of fixed duration (1 ms),

- the IMU linear acceleration (in m/s²) along each axis, in the camera frame,

- the IMU gyroscopic measurement (angular velocity, in degrees/s) in the camera frame,

- the ground truth pose of the camera (position and orientation), in the frame of the motion-capture system,

- the ground truth pose of the camera (position and orientation), with respect to the first camera pose, i.e., in the camera frame.
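The fixed-interval event rate described in the first bullet can be computed roughly as follows. This is a sketch assuming timestamps in seconds (e.g., as read from events.txt); the bin width defaults to the 1 ms stated above, and `event_rate` is a hypothetical helper, not the code used to produce the provided plots.

```python
from typing import List, Sequence


def event_rate(timestamps: Sequence[float], bin_s: float = 1e-3) -> List[float]:
    """Event rate in events/s for consecutive bins of fixed duration bin_s (seconds).

    Bin k covers [t_min + k*bin_s, t_min + (k+1)*bin_s); empty bins yield 0.
    """
    if not timestamps:
        return []
    t_min = min(timestamps)
    n_bins = int((max(timestamps) - t_min) // bin_s) + 1
    counts = [0] * n_bins
    for t in timestamps:
        counts[int((t - t_min) // bin_s)] += 1
    # Divide each count by the bin duration to convert it to events per second.
    return [c / bin_s for c in counts]
```

Binning over fixed intervals trades temporal resolution for a stable estimate; a shorter `bin_s` follows bursts more closely but gives noisier rates.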

The orientation is provided using Euler angles, according to the ZYX convention (the MATLAB default).
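To reproduce these angles from the quaternions in groundtruth.txt, one possible conversion is sketched below. It assumes unit Hamilton quaternions stored in the file's (qx qy qz qw) order and the ZYX convention R = Rz(yaw) Ry(pitch) Rx(roll); the (yaw, pitch, roll) output order is intended to mirror MATLAB's `quat2eul` default, which, note, expects quaternions in (w x y z) order. `quat_to_euler_zyx` is a hypothetical name.

```python
import math
from typing import Tuple


def quat_to_euler_zyx(qx: float, qy: float, qz: float, qw: float) -> Tuple[float, float, float]:
    """Return (yaw, pitch, roll) in radians such that R = Rz(yaw) @ Ry(pitch) @ Rx(roll).

    Assumes a Hamilton quaternion given in the groundtruth.txt order (qx qy qz qw).
    """
    # Normalize defensively in case of small numerical drift in the stored values.
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    qx, qy, qz, qw = qx / n, qy / n, qz / n, qw / n

    yaw = math.atan2(2 * (qw * qz + qx * qy), 1 - 2 * (qy * qy + qz * qz))
    # Clamp to [-1, 1] so asin does not fail near gimbal lock (pitch = +/-90 deg).
    s = max(-1.0, min(1.0, 2 * (qw * qy - qz * qx)))
    pitch = math.asin(s)
    roll = math.atan2(2 * (qw * qx + qy * qz), 1 - 2 * (qx * qx + qy * qy))
    return yaw, pitch, roll
```

Apply `math.degrees` to each angle to obtain degrees. Near pitch = ±90 degrees, yaw and roll are not uniquely defined, which is an inherent limitation of any Euler-angle representation.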

DAVIS 240C Datasets

These datasets were generated using a DAVIS240C from iniLabs. They contain the events, images, IMU measurements, and camera calibration from the DAVIS, as well as ground truth from a motion-capture system. The datasets recorded with a motorized linear slider contain neither motion-capture data nor IMU measurements; instead, ground truth is provided by the slider's position. All datasets in gray use the same intrinsic calibration, and the "calibration" dataset makes it possible to fit other camera models.
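As an illustration of how the shared intrinsic calibration in calib.txt (fx fy cx cy k1 k2 p1 p2 k3) might be used, the sketch below projects a 3D point given in the camera frame to pixel coordinates. It assumes the coefficients follow the common OpenCV radial-tangential ("plumb bob") convention, which the k1 k2 p1 p2 k3 ordering suggests but which this page does not state explicitly. `Calib`, `parse_calib`, and `project` are hypothetical names.

```python
from typing import NamedTuple, Tuple


class Calib(NamedTuple):
    fx: float
    fy: float
    cx: float
    cy: float
    k1: float
    k2: float
    p1: float
    p2: float
    k3: float


def parse_calib(line: str) -> Calib:
    """Parse the single line 'fx fy cx cy k1 k2 p1 p2 k3' from calib.txt."""
    return Calib(*(float(v) for v in line.split()))


def project(point: Tuple[float, float, float], c: Calib) -> Tuple[float, float]:
    """Project a camera-frame point (X, Y, Z), Z > 0, to distorted pixel coordinates."""
    X, Y, Z = point
    x, y = X / Z, Y / Z  # normalized image coordinates
    r2 = x * x + y * y
    radial = 1 + c.k1 * r2 + c.k2 * r2 ** 2 + c.k3 * r2 ** 3
    # Radial plus tangential distortion (assumed OpenCV convention).
    xd = x * radial + 2 * c.p1 * x * y + c.p2 * (r2 + 2 * x * x)
    yd = y * radial + c.p1 * (r2 + 2 * y * y) + 2 * c.p2 * x * y
    return c.fx * xd + c.cx, c.fy * yd + c.cy
```

Undistorting event coordinates requires inverting this mapping, which is usually done iteratively (for instance with OpenCV's undistortion routines) rather than in closed form.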

| Name | Motion | Scene | Download | Refs |

|---|---|---|---|---|

| shapes_rotation | Rotation, increasing speed |

Simple shapes on a wall | Text (zip) rosbag Plots (zip) |

|

| shapes_translation | Translation, increasing speed |

Simple shapes on a wall | Text (zip) rosbag Plots (zip) |

|

| shapes_6dof | 6-DOF, increasing speed |

Simple shapes on a wall | Text (zip) rosbag Plots (zip) |

|

| poster_rotation | Rotation, increasing speed |

Wallposter | Text (zip) rosbag Plots (zip) |

|

| poster_translation | Translation, increasing speed |

Wallposter | Text (zip) rosbag Plots (zip) |

|

| poster_6dof | 6-DOF, increasing speed |

Wallposter | Text (zip) rosbag Plots (zip) |

|

| boxes_rotation | Rotation, increasing speed |

Highly textured environment | Text (zip) rosbag Plots (zip) |

|

| boxes_translation | Translation, increasing speed |

Highly textured environment | Text (zip) rosbag Plots (zip) |

|

| boxes_6dof | 6-DOF, increasing speed |

Highly textured environment | Text (zip) rosbag Plots (zip) |

|

| hdr_poster | 6-DOF, increasing speed |

Flat scene, high dynamic range | Text (zip) rosbag Plots (zip) |

|

| hdr_boxes | 6-DOF, increasing speed |

Boxes, high dynamic range | Text (zip) rosbag Plots (zip) |

|

| outdoors_walking | 6-DOF, walking |

Sunny urban environment | Text (zip) rosbag Plots (zip) |

|

| outdoors_running | 6-DOF, running |

Sunny urban environment | Text (zip) rosbag Plots (zip) |

|

| dynamic_rotation | Rotation, increasing speed |

Office with moving person | Text (zip) rosbag Plots (zip) |

|

| dynamic_translation | Translation, increasing speed |

Office with moving person | Text (zip) rosbag Plots (zip) |

|

| dynamic_6dof | 6-DOF, increasing speed |

Office with moving person | Text (zip) rosbag Plots (zip) |

|

| calibration | 6-DOF, slow |

Checkerboard (6x7, 70mm), for custom calibrations, only applies to above datasets in gray |

Text (zip) rosbag Plots (zip) |

|

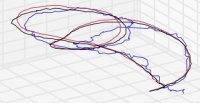

| office_zigzag | 6-DOF, zigzag, slow |

Office environment | Text (zip) rosbag Plots (zip) |

[1] |

| office_spiral | 6-DOF, spiral, slow |

Office environment | Text (zip) rosbag Plots (zip) |

[1] |

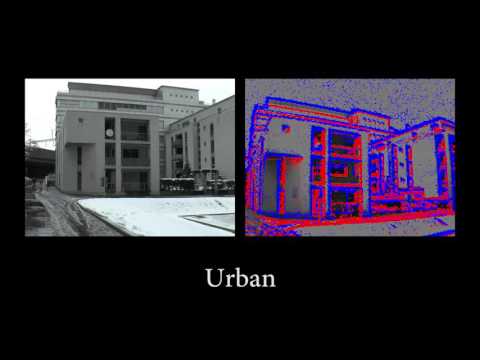

| urban | Linear, slow |

Urban environment | Text (zip) rosbag Plots (zip) |

[1] |

| slider_close | Linear, constant speed |

Flat scene at 23.1 cm | Text (zip) rosbag Plots (zip) |

[2] |

| slider_far | Linear, constant speed |

Flat scene at 58.4 cm | Text (zip) rosbag Plots (zip) |

[2] |

| slider_hdr_close | Linear, constant speed |

Flat scene at 23.1 cm, HDR | Text (zip) rosbag Plots (zip) |

[2] |

| slider_hdr_far | Linear, constant speed |

Flat scene at 58.4 cm, HDR | Text (zip) rosbag Plots (zip) |

[2] |

| slider_depth | Linear, constant speed |

Objects at different depths | Text (zip) rosbag Plots (zip) |

[2] |

Synthetic Datasets

We provide two synthetic scenes. These datasets were generated using the event-camera simulator described below.

| Name | Motion | Scene | Download | Refs |
|---|---|---|---|---|
| simulation_3planes | Translation, circle | 3 planes at different depths | Text (zip), rosbag, Plots (zip) | [2] |
| simulation_3walls | 6-DOF | Room with 3 textured walls | Text (zip), rosbag, Plots (zip) | [2] |

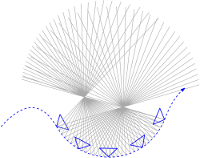

Event-Camera Simulator

In addition to the datasets, we also release a simulator based on Blender for generating synthetic datasets. The simulator is useful for prototyping visual-odometry or event-based feature-tracking algorithms and is described in more detail in the accompanying paper. The contrast threshold is configurable; however, the simulator currently does not model sensor noise. The simulator is available on GitHub and includes a ready-to-run example.
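The contrast threshold refers to the idealized event-generation model: a pixel fires an event each time its log intensity moves by a threshold C away from the log intensity at its last event. The sketch below applies this noise-free model to a single pixel between two rendered frames, linearly interpolating each crossing time between the frame timestamps. It is a generic illustration under these assumptions, not the code of the released Blender-based simulator; `generate_events` and its parameters are hypothetical, and polarity is encoded as 1 (increase) or 0 (decrease).

```python
from typing import List, Tuple


def generate_events(ref_log: float, log_prev: float, log_next: float,
                    t_prev: float, t_next: float,
                    C: float) -> Tuple[List[Tuple[float, int]], float]:
    """Noise-free events for one pixel between two frames.

    ref_log:  log intensity at this pixel's last event (initialize with the first frame)
    log_prev, log_next: log intensities at the two frames (times t_prev, t_next)
    C:        contrast threshold (> 0)
    Returns (list of (timestamp, polarity), updated reference log intensity).
    """
    events: List[Tuple[float, int]] = []
    while True:
        if log_next - ref_log >= C:
            ref_log += C   # positive crossing
            polarity = 1
        elif ref_log - log_next >= C:
            ref_log -= C   # negative crossing
            polarity = 0
        else:
            break
        # Invariant |log_prev - ref_log_old| < C guarantees log_next != log_prev here,
        # so the crossing lies inside (t_prev, t_next].
        frac = (ref_log - log_prev) / (log_next - log_prev)
        events.append((t_prev + frac * (t_next - t_prev), polarity))
    return events, ref_log
```

A full simulator would call this for every pixel and every pair of consecutive rendered frames, carrying `ref_log` forward per pixel. In practice the log is often taken of the intensity plus a small offset to avoid log(0).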

References that used these Datasets

These references point to papers that used these datasets in their experiments. If you used these data, please send your paper to mueggler (at) ifi (dot) uzh (dot) ch.

[1] Low-Latency Visual Odometry using Event-based Feature Tracks. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, 2016. Best Application Paper Award Finalist! Highlight Talk: Acceptance Rate 2.5%

[2] EMVS: Event-based Multi-View Stereo. British Machine Vision Conference (BMVC), York, 2016. Best Industry Paper Award! Oral Talk: Acceptance Rate 7%

License

These datasets are released under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 license (CC BY-NC-SA 3.0), which permits free non-commercial use (including research).

Acknowledgements

This work was supported by the DARPA FLA Program, the Google Faculty Research Award, the Qualcomm Innovation Fellowship, the National Centre of Competence in Research Robotics (NCCR), the Swiss National Science Foundation, and the UZH Forschungskredit.