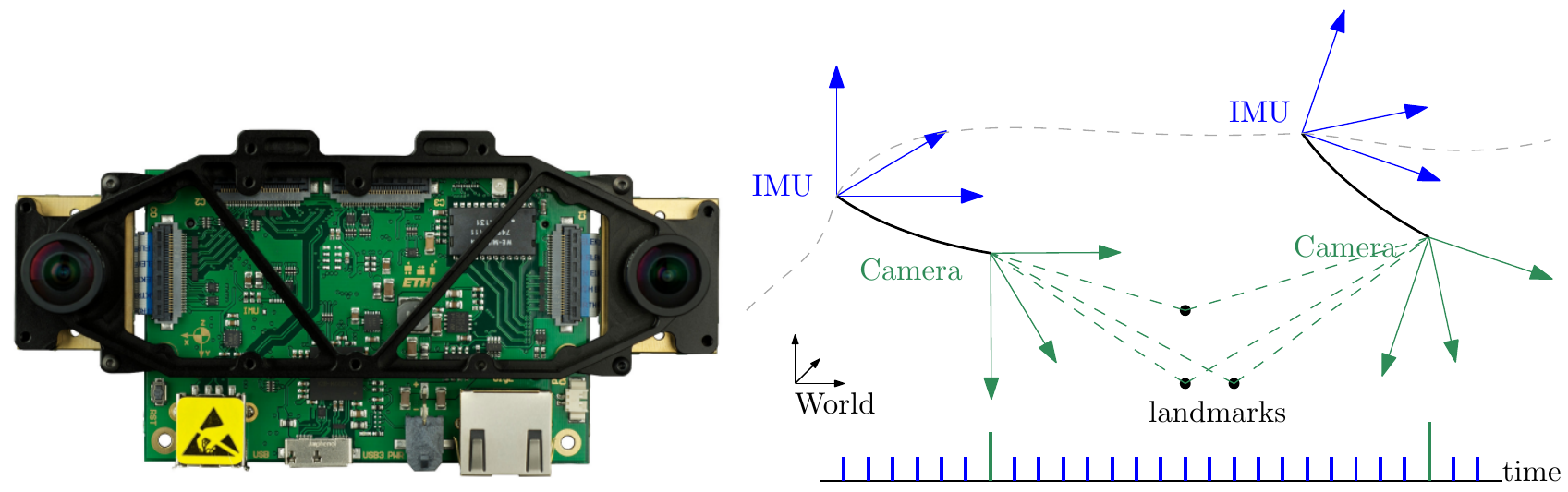

Visual-inertial odometry (VIO) is the process of estimating the state (pose and velocity) of an agent (e.g., an aerial robot) using only the input of one or more cameras plus one or more inertial measurement units (IMUs) attached to it. VIO is the only viable alternative to GPS and lidar-based odometry to achieve accurate state estimation. Since both cameras and IMUs are very cheap, these sensor types are ubiquitous in today's aerial robots.

Reference

D. Scaramuzza, Z. Zhang, Visual-Inertial Odometry of Aerial Robots, Encyclopedia of Robotics, Springer, 2019.

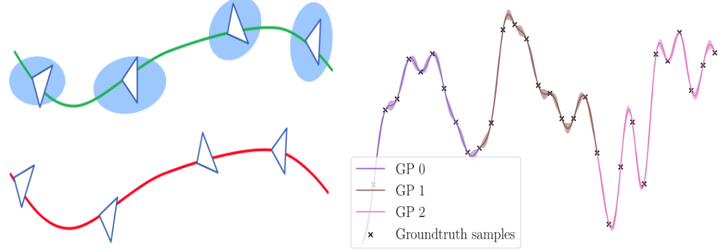

Despite the existence of different error metrics for trajectory evaluation in SLAM, their theoretical justifications and connections are rarely studied, and few methods handle temporal association properly. In this work, we propose to formulate the trajectory evaluation problem in a probabilistic, continuous-time framework. By modeling the groundtruth as random variables, the concepts of absolute and relative error are generalized to likelihoods. Moreover, the groundtruth is represented as a piecewise Gaussian process in continuous time. Within this framework, we are able to establish theoretical connections between relative and absolute error metrics and handle temporal association in a principled manner.

Reference

Z. Zhang, D. Scaramuzza, Rethinking Trajectory Evaluation for SLAM: a Probabilistic, Continuous-Time Approach, ICRA19 Workshop on Dataset Generation and Benchmarking of SLAM algorithms for Robotics and VR/AR, Montreal, 2019. Best Paper Award!

For mobile robots to localize robustly, actively considering the perception requirement at the planning stage is essential. In this paper, we propose a novel representation for active visual localization. By formulating the Fisher information and sensor visibility carefully, we are able to summarize the localization information into a discrete grid, namely the Fisher information field. The information for arbitrary poses can then be computed from the field in constant time, without the costly iteration over all the 3D landmarks. Experimental results on simulated and real-world data show the great potential of our method in efficient active localization and perception-aware planning. To benefit related research, we release our implementation of the information field to the public.

Beyond Point Clouds: Fisher Information Field for Active Visual Localization, IEEE International Conference on Robotics and Automation (ICRA), 2019.

In this tutorial, we provide principled methods to quantitatively evaluate the quality of an estimated trajectory from visual(-inertial) odometry (VO/VIO), which is the foundation of benchmarking the accuracy of different algorithms. First, we show how to determine the transformation type to use in trajectory alignment based on the specific sensing modality (i.e., monocular, stereo and visual-inertial). Second, we describe commonly used error metrics (i.e., the absolute trajectory error and the relative error) and their strengths and weaknesses. To make the methodology presented for VO/VIO applicable to other setups, we also generalize our formulation to any given sensing modality. To facilitate the reproducibility of related research, we publicly release our implementation of the methods described in this tutorial.

Reference

Z. Zhang, D. Scaramuzza, A Tutorial on Quantitative Trajectory Evaluation for Visual(-Inertial) Odometry, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, 2018. PDF (PDF, 483 KB), PPT (PPTX, 7 MB), VO/VIO Evaluation Toolbox.
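To make the alignment and absolute trajectory error described in the tutorial abstract more concrete, here is a minimal sketch in Python with NumPy. It is not the released toolbox: the function names `align_umeyama` and `ate_rmse` are hypothetical, the alignment is the standard Umeyama least-squares method, and only two transformation types are covered, a rigid-body alignment (which we assume suits stereo, where scale is observable) and a similarity alignment (which we assume suits monocular, where scale is not). The yaw-plus-translation alignment appropriate for visual-inertial setups is not shown.

```python
import numpy as np


def align_umeyama(est, gt, with_scale=False):
    """Least-squares alignment of estimated positions onto groundtruth.

    est, gt: (N, 3) arrays of time-associated positions.
    Returns (s, R, t) such that gt is approximated by s * R @ est_i + t.
    """
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    e, g = est - mu_e, gt - mu_g
    n = est.shape[0]

    # Cross-covariance between groundtruth and estimate.
    cov = g.T @ e / n
    U, D, Vt = np.linalg.svd(cov)

    # Guard against a reflection in the recovered rotation.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0
    R = U @ S @ Vt

    s = 1.0
    if with_scale:
        var_e = (e ** 2).sum() / n
        s = np.trace(np.diag(D) @ S) / var_e

    t = mu_g - s * R @ mu_e
    return s, R, t


def ate_rmse(est, gt, with_scale=False):
    """Root-mean-square absolute trajectory error after alignment."""
    s, R, t = align_umeyama(est, gt, with_scale)
    aligned = s * est @ R.T + t
    err = np.linalg.norm(gt - aligned, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))
```

Calling `ate_rmse(est, gt, with_scale=True)` evaluates a monocular-style estimate, while the default `with_scale=False` keeps the metric scale of the estimate untouched.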

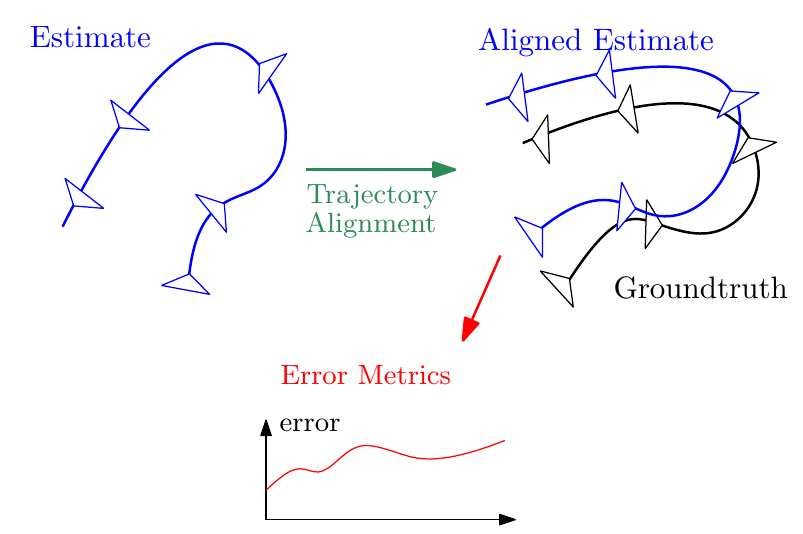

It is well known that visual-inertial state estimation is possible up to a four degrees-of-freedom (DoF) transformation (rotation around gravity and translation), and the extra DoFs ("gauge freedom") have to be handled properly. While different approaches for handling the gauge freedom have been used in practice, no previous study has been carried out to systematically analyze their differences. In this paper, we present the first comparative analysis of different methods for handling the gauge freedom in optimization-based visual-inertial state estimation. We experimentally compare three commonly used approaches: fixing the unobservable states to some given values, setting a prior on such states, or letting the states evolve freely during optimization. Specifically, we show that (i) the accuracy and computational time of the three methods are similar, with the free gauge approach being slightly faster; (ii) the covariance estimation from the free gauge approach appears dramatically different, but is actually tightly related to the other approaches. Our findings are validated both in simulation and on real-world datasets and can be useful for designing optimization-based visual-inertial state estimation algorithms.

Reference

Z. Zhang, G. Gallego, D. Scaramuzza, On the Comparison of Gauge Freedom Handling in Optimization-based Visual-Inertial State Estimation, IEEE Robotics and Automation Letters (RA-L), 2018.
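The four unobservable DoFs mentioned in the abstract can be written out explicitly. The notation below is our own assumption rather than the paper's: $R_i$, $p_i$, $v_i$ denote the orientation, position, and velocity of the $i$-th state in the world frame, $l_j$ a landmark, $g$ the gravity vector along the world $z$ axis, $\psi$ a yaw angle, and $t$ a translation.

```latex
\begin{aligned}
R_i' &= R_z(\psi)\, R_i, \\
p_i' &= R_z(\psi)\, p_i + t, \\
v_i' &= R_z(\psi)\, v_i, \\
l_j' &= R_z(\psi)\, l_j + t,
\qquad \psi \in \mathbb{R},\ t \in \mathbb{R}^3 .
\end{aligned}
```

Because $R_z(\psi)\, g = g$, the gravity direction is preserved, and all relative quantities between states and landmarks are unchanged, so camera and IMU measurements cannot distinguish the transformed solution from the original one. One yaw angle plus three translation components gives the four DoFs.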

Flying robots require a combination of accuracy and low latency in their state estimation in order to achieve stable and robust flight. However, due to the power and payload constraints of aerial platforms, state estimation algorithms must provide these qualities under the computational constraints of embedded hardware. Cameras and inertial measurement units (IMUs) satisfy these power and payload constraints, so visual-inertial odometry (VIO) algorithms are popular choices for state estimation in these scenarios, in addition to their ability to operate without external localization from motion capture or global positioning systems. It is not clear from existing results in the literature, however, which VIO algorithms perform well under the accuracy, latency, and computational constraints of a flying robot with onboard state estimation. This paper evaluates an array of publicly-available VIO pipelines (MSCKF, OKVIS, ROVIO, VINS-Mono, SVO+MSF, and SVO+GTSAM) on different hardware configurations, including several single-board computer systems that are typically found on flying robots. The evaluation considers the pose estimation accuracy, per-frame processing time, and CPU and memory load while processing the EuRoC datasets, which contain six degree of freedom (6DoF) trajectories typical of flying robots. We present our complete results as a benchmark for the research community.

J. Delmerico, D. Scaramuzza, A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots, IEEE International Conference on Robotics and Automation (ICRA), 2018.

In this paper, we propose an active exposure control method to improve the robustness of visual odometry in high dynamic range (HDR) environments. Our method evaluates the proper exposure time by maximizing a robust gradient-based image quality metric. The optimization is achieved by exploiting the photometric response function of the camera. Our exposure control method is evaluated in different real-world environments and outperforms both the built-in auto-exposure function of the camera and a fixed exposure time. To validate the benefit of our approach, we test different state-of-the-art visual odometry pipelines (namely, ORB-SLAM2, DSO, and SVO 2.0) and demonstrate significantly improved performance using our exposure control method in very challenging HDR environments. Datasets and code will be released soon!

Z. Zhang, C. Forster, D. Scaramuzza, Active Exposure Control for Robust Visual Odometry in HDR Environments, IEEE International Conference on Robotics and Automation (ICRA), 2017.
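As a rough illustration of the idea of choosing an exposure by maximizing a gradient-based image quality score, the sketch below searches a list of candidate exposures on a synthetic irradiance map. It is a deliberately simplified stand-in, not the method of the paper: the gamma-curve response in `render`, the plain mean gradient magnitude in `gradient_metric`, and the exhaustive search in `best_exposure` are all assumptions made for this example, and all three function names are hypothetical.

```python
import numpy as np


def render(irradiance, exposure, gamma=2.2):
    """Simulate an image with an assumed gamma-type response and sensor saturation."""
    return np.clip(irradiance * exposure, 0.0, 1.0) ** (1.0 / gamma)


def gradient_metric(image):
    """Mean gradient magnitude, used here as a simple image quality score."""
    gy, gx = np.gradient(image)
    return float(np.mean(np.hypot(gx, gy)))


def best_exposure(irradiance, candidates):
    """Return the candidate exposure with the highest score, plus all scores."""
    scores = [gradient_metric(render(irradiance, e)) for e in candidates]
    return candidates[int(np.argmax(scores))], scores
```

A fully saturated image has no gradients, so an over-long exposure scores zero and is never selected as long as some other candidate preserves texture.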

Recent results in monocular visual-inertial navigation (VIN) have shown that optimization-based approaches outperform filtering methods in terms of accuracy due to their capability to relinearize past states. However, the improvement comes at the cost of increased computational complexity. In this paper, we address this issue by preintegrating inertial measurements between selected keyframes. The preintegration allows us to accurately summarize hundreds of inertial measurements into a single relative motion constraint. Our first contribution is a preintegration theory that properly addresses the manifold structure of the rotation group and carefully deals with uncertainty propagation. The measurements are integrated in a local frame, which eliminates the need to repeat the integration when the linearization point changes while leaving the opportunity for belated bias corrections. The second contribution is to show that the preintegrated IMU model can be seamlessly integrated in a visual-inertial pipeline under the unifying framework of factor graphs. This enables the use of a structureless model for visual measurements, further accelerating the computation. The third contribution is an extensive evaluation of our monocular VIN pipeline: experimental results confirm that our system is very fast and demonstrates superior accuracy with respect to competitive state-of-the-art filtering and optimization algorithms, including off-the-shelf systems such as Google Tango.

On-Manifold Preintegration for Real-Time Visual-Inertial Odometry, IEEE Transactions on Robotics, in press, 2016.

IMU Preintegration on Manifold for Efficient Visual-Inertial Maximum-a-Posteriori Estimation, Robotics: Science and Systems (RSS), Rome, 2015. Best Paper Award Finalist! Oral Presentation: Acceptance Rate 4%. PDF (PDF, 2 MB), Supplementary material (PDF, 498 KB), YouTube.
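The core of the preintegration idea, summarizing many inertial measurements between two keyframes into a single relative motion constraint, can be sketched in a few lines. The code below is a minimal illustration under our own assumptions, not the authors' implementation: it uses simple Euler integration with fixed biases, and it omits noise propagation and the bias Jacobians used for belated bias correction. The function names `so3_exp` and `preintegrate` are hypothetical.

```python
import numpy as np


def so3_exp(phi):
    """Rodrigues' formula: map a rotation vector to a rotation matrix."""
    angle = np.linalg.norm(phi)
    K = np.array([[0.0, -phi[2], phi[1]],
                  [phi[2], 0.0, -phi[0]],
                  [-phi[1], phi[0], 0.0]])
    if angle < 1e-8:
        return np.eye(3) + K  # first-order approximation for tiny angles
    return (np.eye(3)
            + np.sin(angle) / angle * K
            + (1.0 - np.cos(angle)) / angle ** 2 * K @ K)


def preintegrate(gyro, accel, dt, bias_g=np.zeros(3), bias_a=np.zeros(3)):
    """Accumulate relative rotation, velocity, and position increments.

    gyro, accel: sequences of 3-vectors measured in the body frame.
    The increments are expressed in the body frame of the first keyframe
    and do not contain gravity, which enters only when the increments
    are related to the world-frame states.
    """
    dR, dv, dp = np.eye(3), np.zeros(3), np.zeros(3)
    for w, a in zip(gyro, accel):
        a_corr = a - bias_a
        # Position and velocity use the rotation increment before its update.
        dp = dp + dv * dt + 0.5 * dR @ a_corr * dt ** 2
        dv = dv + dR @ a_corr * dt
        dR = dR @ so3_exp((w - bias_g) * dt)
    return dR, dv, dp
```

Because the increments depend only on the measurements and the bias estimate, they do not need to be recomputed when the optimizer changes the linearization point of the keyframe states.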

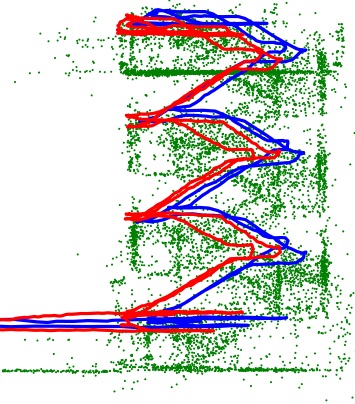

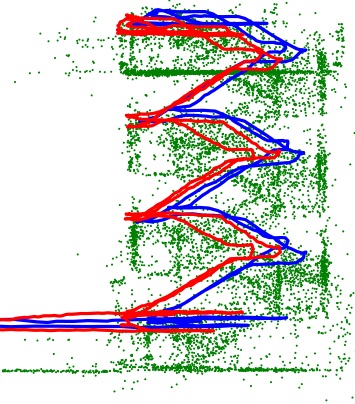

We propose a semi-direct monocular visual odometry algorithm that is precise, robust, and faster than current state-of-the-art methods. The semi-direct approach eliminates the need for costly feature extraction and robust matching techniques for motion estimation. Our algorithm operates directly on pixel intensities, which results in subpixel precision at high frame rates. A probabilistic mapping method that explicitly models outlier measurements is used to estimate 3D points, which results in fewer outliers and more reliable points. Precise and high frame-rate motion estimation brings increased robustness in scenes of little, repetitive, and high-frequency texture. The algorithm is applied to micro-aerial-vehicle state estimation in GPS-denied environments and runs at 55 frames per second on the onboard embedded computer and at more than 300 frames per second on a consumer laptop.

This video shows results from a modification of the SVO algorithm that generalizes to a set of rigidly attached (not necessarily overlapping) cameras. Simultaneously, we run a CPU implementation of the REMODE algorithm on the front, left, and right camera. Everything runs in real-time on a laptop computer. Parking garage dataset courtesy of NVIDIA.

SVO: Semi-Direct Visual Odometry for Monocular and Multi-Camera Systems, IEEE Transactions on Robotics and Automation, to appear, 2016. Includes comparison against ORB-SLAM, LSD-SLAM, and DSO and comparison among Dense, Semi-dense, and Sparse Direct Image Alignment.

SVO: Fast Semi-Direct Monocular Visual Odometry, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

REMODE: Probabilistic, Monocular Dense Reconstruction in Real Time, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

This video shows the estimation of the vehicle motion from image features. The video demonstrates the approach described in our paper, which uses a 1-point RANSAC algorithm to remove the outliers. Except for the feature extraction process, the outlier removal and motion estimation steps take less than 1 ms on a normal laptop computer.