SOFAS - SOFtware Analysis Services

Successful software systems must change or they become progressively less useful, but as they evolve, they become more complex and consequently more resources are needed to preserve and simplify their structure. Studies estimate the costs for the maintenance and evolution of large, complex software systems from 50% to 95% of the total costs in the software life-cycle. To reduce these costs several techniques and tools have been developed: to discover components that need to be modified when a new feature is integrated, to detect architectural shortcomings, or for project managers to estimate the maintenance costs and allow for better planning.

These software analysis tools focus on just a particular kind of analysis to produce the results wanted by the engineer. For every required analysis, a specialized tool, with its own explicit or implicit meta-model dictating how to represent the input and the output, has to be installed, configured and executed. Thus the sharing of information between tools is only possible by means of a cumbersome export towards files complying to a specified exchange format. Even if different analyses of the same kind exist, there is no way to compare their results or integrate them other than manual investigation. Tool interoperability is hampered even more by their stand-alone nature, as well as their platform and language dependence.

Thus, the combination and integration of different software analysis tools is a challenging problem when we need to gain a deeper insight into a software system evolution. We claim that this status quo severely hampers software evolution research and a critical assessment of the research fields uncovers the fact that people keep re-inventing the same wheels with little advancement of the field as a whole.

SOFAS' (SOFware Analysis Services) purpose is to solve this problem by devising a distributed and collaborative software analysis platform to allow for interoperability of software analysis tools across platform, geographical and organizational boundaries. Such tools are mapped into a software analysis taxonomy and adhere to specific meta-models and ontologies for their category of analysis and offer a common service interface that enables their composite use on the Internet. These distributed analysis services are accessible through an incrementally augmented software analysis catalog, where organizations and research groups can register and share their tools.

The Architecture

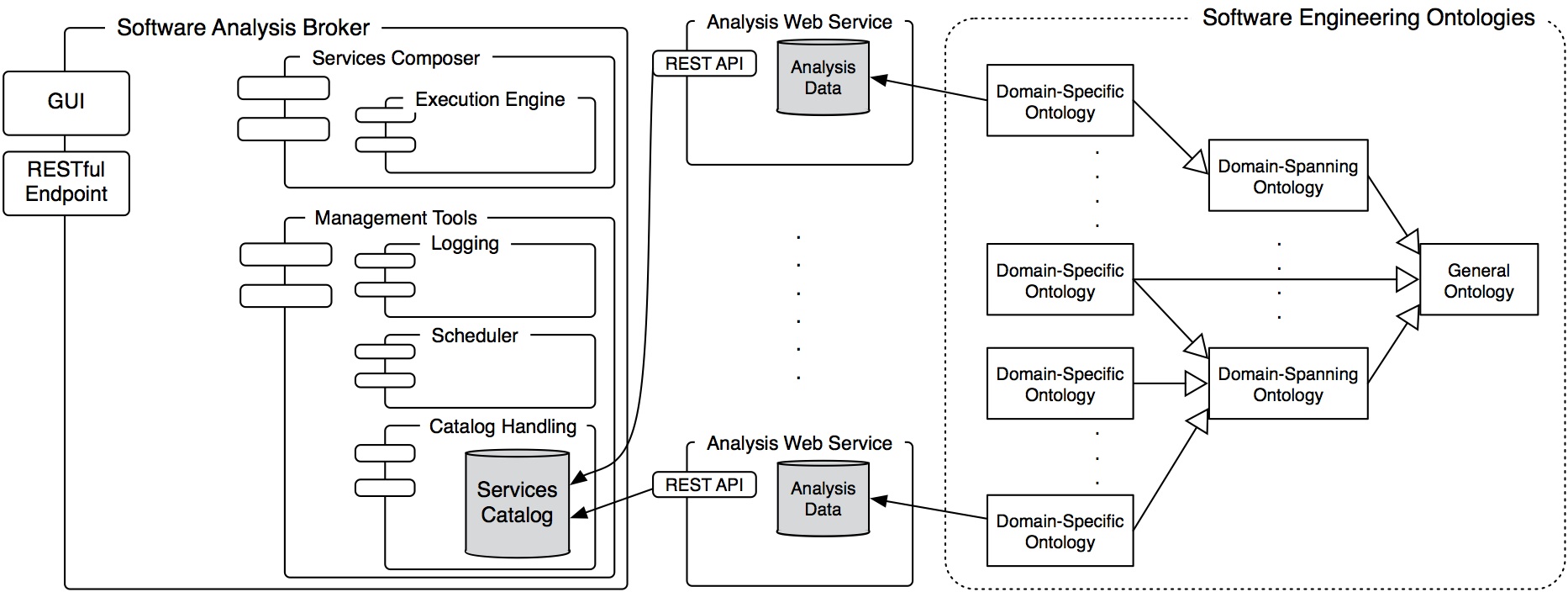

SOFAS is a RESTful architecture offering a simple yet effective way to provide software analyses. It is based on the principles of Representational State Transfer around resources on the web. The figure underneath gives an overview of the architecture, which is made up by three main constituents: Software Analysis Web Services, a Software Analysis Broker, and Software Analysis Ontologies. Software Analysis Web Services expose the functionality and data of software (evolution) analyses through a standard RESTful web service interface. The Software Analysis Broker acts as the services manager and the interface between the services and the users. It contains a catalog of all the registered analysis services. Ontologies define and represent the data consumed and produced by the different services. In the following, we briefly describe each of these three components:

Software Analysis Web Services

SOFAS’ purpose is to provide software analyses and the data they produce in a simple, standardized way, freeing them from specific IDEs, platforms and languages. From a user’s perspective, software analyses are inherently linear and uniform in the way they work. Given some information about a software project (be it the code, its source code repository, some data already calculated by an analysis, etc.) and possible analysis calibration settings, they extract and/or calculate their specific data. Once that is completed, the results can be fetched in different, specific formats and, when needed, they can also be updated or deleted. Given these premises, RESTful services perfectly fit our needs. A RESTful web service provides a uniform interface to the clients, no matter what it actually does. It is a collection of resources all identified by URIs, which can be accessed and manipulated with HTTP methods (e.g., POST, GET, PUT or DELETE). Furthermore, every message exchanged is self-descriptive as it always contains the Internet media type of the content, which is enough to describe how to process it. In our case, the analyses services boil down to simply two resources: the service itself and the individual analyses.

These analyses can be classified into three main categories: data gatherers, basic software evolution analyses and composite software evolution analyses.

- Data Gatherers work on raw data to extract evolution information from different software repositories, such as version control, issue tracking, mailing lists, or plain source code, and import it into SOFAS for other analyses to use it. Gathering this data can be extremely time consuming, as project histories can consist of several years of active development. However, this is a vital step for any analysis, as it provides the necessary software project data to work on. The following data gatherers are registered in SOFAS:

Version history importers for CVS, SVN, GIT and Mercurial. They extract the version control information comprising release, revision, and commit data from a given version control repository.

Issue tracking history importers for Bugzilla, Google Code, Trac, and Source- Forge. They extract the issue tracking history from a given issue tracker instance.

GNU Mailman importer. It extracts communication data from a given GNUMailman-based mailing list.

Meta-model extractors for Java and C#. They extract the static source code structure of a software project, based on the FAMIX meta-model.

- Basic Software Evolution Analyses exploit the data imported by one data gatherer to calculate all sorts of software evolution information: version history metrics, code metrics of specific releases/revisions, issue tracking metrics, etc. The analyses currently registered are:

Version history metrics calculator. It calculates several statistics from a given project version history.

Release meta-model extractor. It extracts the static source code structure (based on FAMIX) of one or more specific releases of a software project (written in Java or C#), given its extracted version history.

Code Metrics calculators. They compute some of the most common software metrics (35 as of now) of a software system.

Change type distiller. Given a project version history it extracts, for each revision, all the fine-grained source code changes of each source code file (using the taxonomy defined by Fluri et al.). Change coupling detector. It calculates the change couplings for all the files from a given version control history.

Change coupling history calculator. It calculates the evolution of change couplings over the duration of a given version control history.

Code clones detector. It extracts the code clones from a specific version of a given version control history using JCCD.

Code clones history calculator. It extracts the code clones from a given version control history, by regular intervals defined by the user.

Yesterday’s Weather service. It calculates the Yesterday’s Weather metric from a given version control history.

Code ownership detector. It detects, for each file, which developers "own" it. That is the developers who should know the most about that specific file, based on how much and when they changed it. This information is extracted from a given version control history.

Gini coefficient calculator. It calculates the distribution of changes between the developers in a given version control history using the Gini coefficient, as proposed by Giger et al..

Change metrics-based defect predictor. It calculates the most defect prone files based on the change metrics calculated by the aforementioned change metrics analysis.

- Composite Software Evolution Analyses aggregate data produced by other analyses to calculate more complex and domain spanning evolution information. These are some of the analyses currently registered in SOFAS:

Issue-revision linkers. Given the issue tracking and version histories of a specific software project, they reconstruct the links between issues and the revisions that fixed them. As of now three of them exist, using the heuristics proposed by Mockus et al., Sliwerski et al., and Fischer et al..

Code Disharmonies detector. It detects all the code disharmonies proposed by Lanza and Marinescu in a software project using the code metrics extracted by the aforementioned metrics calculators.

Code-churn-based defect predictor. It predicts the most defect prone entities based on the combination of source code metrics calculated for specific snapshots of a given version control history. It is based on the algorithm proposed by D’Ambros et al..

Bug Cache defect predictor. Given the issue tracking and version histories of a specific software project and the links between them (detected by one of the aforementioned linkers), it predicts further faults, based on the algorithm developed by Kim et al..

Metrics-based defect predictor. It predicts the most defect prone entities the combination of source code metrics calculated by the aforementioned analyses.

Email-Source code linker. It links emails with source code given version history and mailing list information extracted by the associated data gatherers.

Service Analysis Broker

It acts as a layer between the services and the users, so that they do not have to interact directly with the raw services. It plays a vital role in facilitating the use of the services in an effective and meaningful way. The broker is made up of four main components:

- Analyses Catalog. It stores and classifies all the registered analysis services so they can be discovered, invoked, and the analysis data they produced fetched. We developed a software analysis taxonomy to systematically classify existing and future services. This taxonomy divides the possible analyses into three main categories: development process, underlying models, and source code (more details available here). This taxonomy is also defined as an OWL ontology. This allows us to have a very complex and rich service classification. Furthermore, SPARQL can be used to query the catalog and fetch specific services. With it, services can be queried based on what categories they belong to, on any of their attributes, on the attributes of any of the categories they belong to, etc.

- Services Composer. It takes care of translating the workflows defined through UI by the user into a real, executable workflow. Having the composition definition and the actual composition language decoupled, allows the user to compose services in an intuitive way, hiding the complexity and technicalities of the actual composition and orchestration. SOFAS uses its own custom service composition language called SCoLa (SOFAS Composition Language), a simplified and slightly customized version of WS-BPEL.

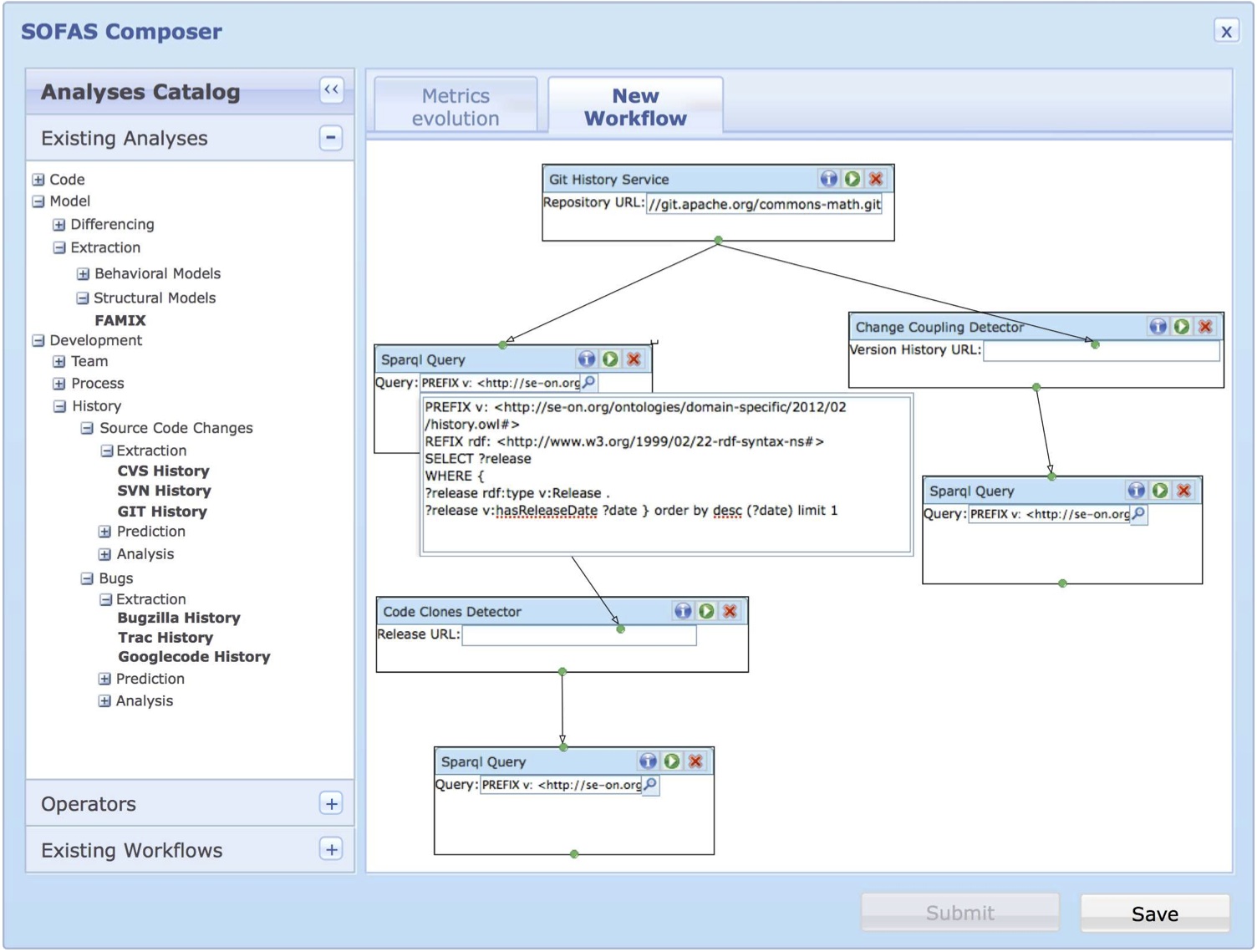

- User Interface. It is the actual access point to the Software Analysis Broker. It consists of a web GUI, meant for human users and a series of RESTful service endpoints to be (semi)-automatically used by applications. Through it, the user can browse the Services Catalog to find the needed analyses, compose them and eventually run them. The user can also pick from some already predefined combinations of analysis services provided as high level analyses workflows (called analysis blueprints). Services can be combined into SCoLa workflows in a intuitive, high level and graphical “pipe and filter” fashion, as shown in the figure below.

-

- Services Management Tools. A workflow is not just a mere collection of services called one after another. In particular, this holds when long running, asynchronous web services are involved. In order to effectively execute it, every single service needs to be logged and monitored to check if it is up and running, if it is in an erroneous state and why, if it completed a required operation, etc. These functionalities are vital for end users, but their use should be as transparent, standardized and automated as possible. This is why we implemented a series of services to take care of that. In this way calls to them can be easily and automatically weaved into a user-defined workflow by the Services Composer.

Software Analysis Ontologies

We use ontologies to define and represent the data consumed and produced by the different services. To do so, we developed our own Software Evolution Ontology, called SEON. For a full description of it, please refer to its official web page

Related Publications

- Giacomo Ghezzi and Harald C. Gall "A Framework for Semi-Automated Software Evolution Analysis Composition" Automated Software Engineering, Vol. 20 (3), 2013. (Journal Article).

- Michael Würsch, Giacomo Ghezzi, Matthias Hert, Gerald Reif, Harald C. Gall "SEON - A Family of Ontologies for Software Evolution and its Applications"Computing, Vol. 94 (11), 2012.

- Giacomo Ghezzi and Harald C. Gall "SOFAS : A Lightweight Architecture for Software Analysis as a Service" Working IEEE/IFIP Conference on Software Architecture (WICSA 2011), 20-24 June 2011, Boulder, Colorado, USA 2011.

- Matthias Hert, Giacomo Ghezzi, Michael Würsch, Harald C. Gall "How to 'Make a Bridge to the new Town' using OntoAccess" Proceedings of the 10th International Semantic Web Conference (ISWC) 2011.

- Giacomo Ghezzi and Harald C. Gall "Distributed and Collaborative Software Analysis" Collaborative Software Engineering, Editors: Ivan Mistrik, John Grundy, Jim Whitehead, Andrè van der Hoek, January; 2010, Springer-Verlag.

- Giacomo Ghezzi and Harald C. Gall "SOFAS Architecture" University of Zurich, Department of Informatics, Software Evolution and Architecture Lab 2010.

- Giacomo Ghezzi and Harald C. Gall "Towards Software Analysis as a Service" Proceedings of Evol'08, the 4th Intl. ERCIM Workshop on Software Evolution and Evolvability at the 23rd IEEE/ACM Intl. Conf. on Automated Software Engineering, September 2008.

Contact

People interested in working with the SOFAS platform or even collaborating on it are invited to contact Prof. Harald Gall or Giacomo Ghezzi.