Dr. Panichella and Grano

Contacts:

PI: Dr. Sebastiano Panichella, Prof. Dr. Harald Gall

Ph.D. Students:

Giovanni Grano

Carmine Vassallo

---------------------------------------------------------------------------------------------------------

The main CONTENT of this page:

(1) main advances/outcomes of this project (linking the accepted papers in the project), compared to the state-of-the-art;

(2) Work Packages (WPs) of the proposal (linking the accepted papers to the WPs);

(3) the accepted papers (and the associated slides);

(4) tools and datasets;

(5) AWARDS.

---------------------------------------------------------------------------------------------------------

Description of the project:

The SURF-MobileAppsData project will investigate concepts, techniques, and tools for mining mobile app data available in app stores, in order to support software engineers in the maintenance and evolution of these apps. In particular, the goal of mining mobile app data is to build an analysis framework and a feedback-driven environment that helps developers build better mobile applications by supporting them in (i) shortening the development life cycle and (ii) accommodating actual user needs. Hence, the main purpose of the SURF-MobileAppsData project is to surf the large amount of data that characterizes each app in an app store, with the aim of substantially advancing the current state-of-the-art in mining mobile apps in several novel directions: by providing a multi-level, multi-source feedback mechanism for developers and users; by devising means for multi-source interlinking of user requests and actual changes; and by better wiring up feature development and bug fixing.

Duration: September 2016 - August 2019

Funding: SNF (Total Costs: 349.926 CHF)

General Achievements of the PI:

- According to the [Results reported by the JSS journal], during the funding period of this project Dr. Panichella was ranked among the top 20 Most Active Early Stage Researchers in Software Engineering (SE), and second in Switzerland. We take this opportunity to thank the SNF for supporting our research in SE and mobile computing through the SURF-MobileAppsData SNF project.

- The paper [Sebastiano Panichella, Andrea Di Sorbo, Emitza Guzman, Corrado Aaron Visaggio, Gerardo Canfora, Harald C. Gall: How can I improve my app? Classifying user reviews for software maintenance and evolution. ICSME 2015: 281-290], which originated the idea behind this SNF project, is one of the most cited papers of ICSME 2015 according to Google Scholar, with over 130 citations in around 4 years.

- The ICPC paper written during Dr. Panichella's bachelor studies [Giovanni Capobianco, Andrea De Lucia, Rocco Oliveto, Annibale Panichella, Sebastiano Panichella: On the role of the nouns in IR-based traceability recovery. ICPC 2009: 148-157] is among the most influential papers of ICPC in the last decade (2009-2019).

Collaborations established by Dr. Sebastiano Panichella


---------------------------------------------------------------------------------------------------------

(1) Main advances/outcomes of this project (compared to the state-of-the-art)

Here we provide a brief overview of (i) the contemporary development pipeline of mobile applications and (ii) the advances/outcomes of this project compared to the state-of-the-art. Papers are referenced by identifiers prefixed with C and J.

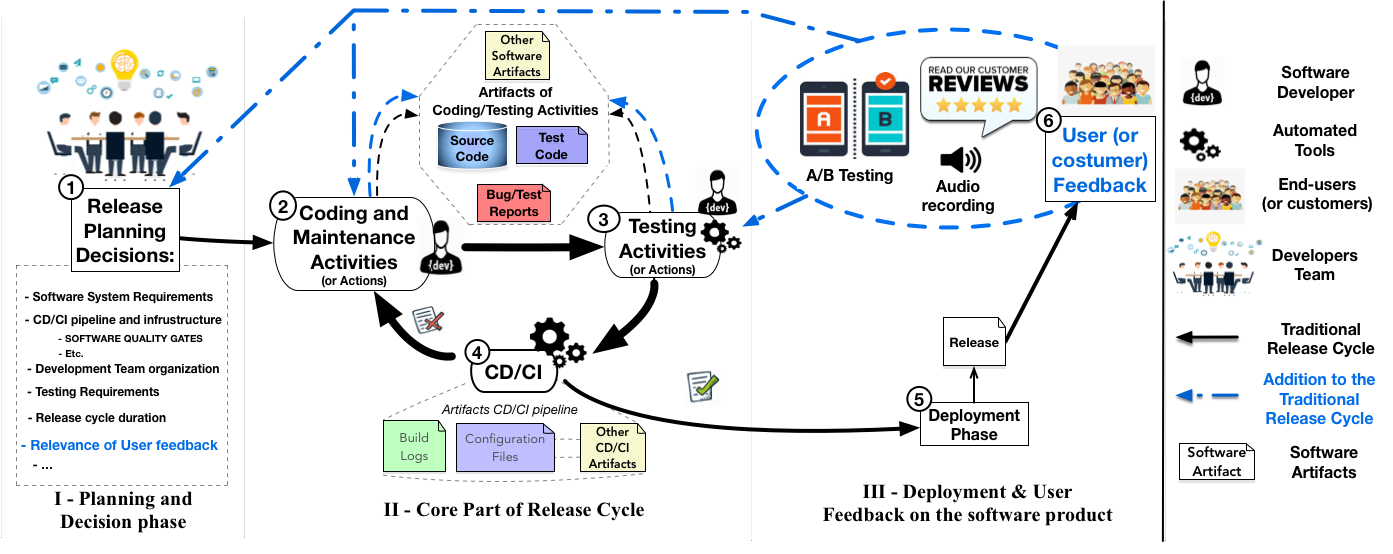

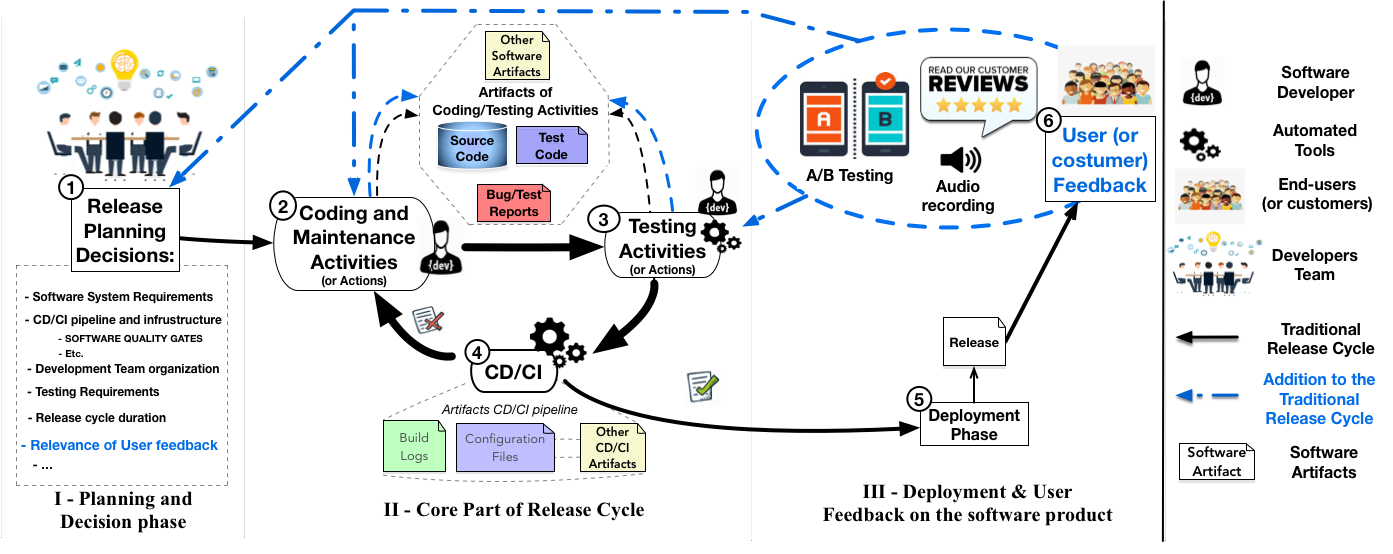

Development Release Cycle of Mobile Apps.

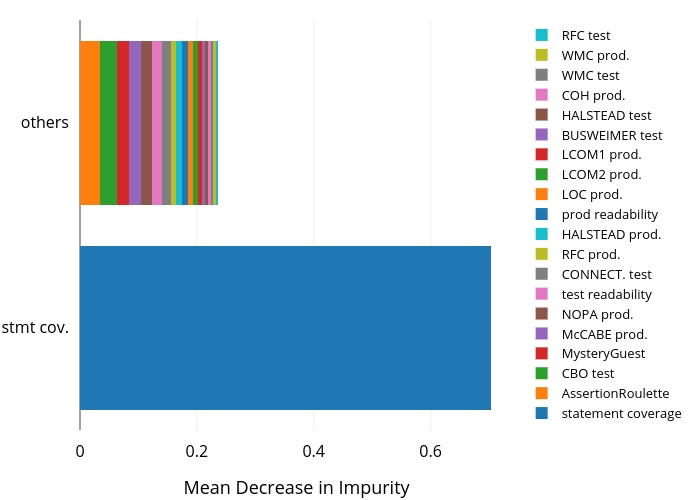

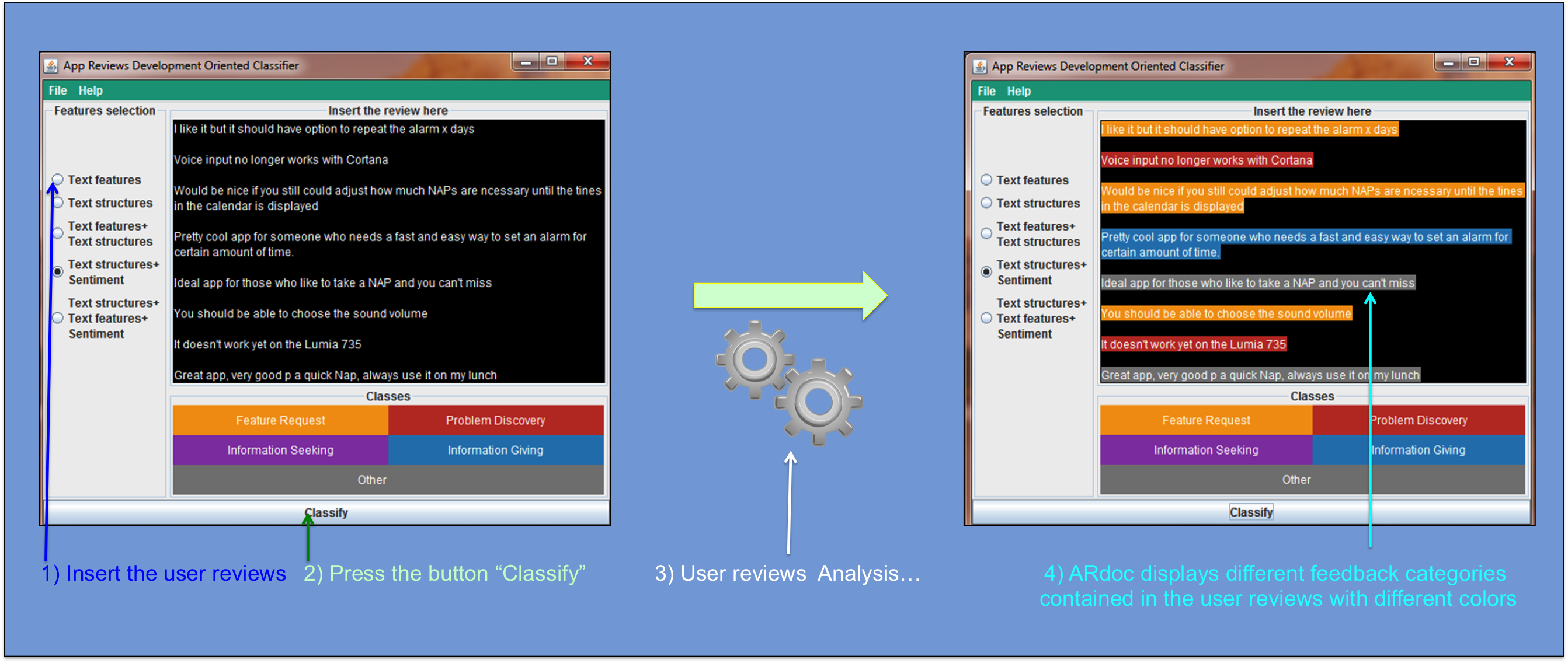

As shown in Figure 1, the conventional mobile software release cycle has evolved in recent years into a more complex process that integrates DevOps software engineering practices. Continuous Delivery (CD) is one of the most prominent emerging DevOps practices: developers' source and test code changes are sent to server machines that automate all the software integration tasks (e.g., building and integration testing) required for delivery. When this automated process fails (a so-called “build failure” [C31]), developers are forced to go back to coding to discover and fix the root cause of the failure [C31, C33, C40]; otherwise, the changes are released to production in short cycles. Users are then notified of these changes as new updates of their mobile apps. In this context, users usually provide feedback on the new version (or on the A/B testing versions) of the apps installed on their devices, often in the form of app reviews [C23, C24, C26, C28, C29, C32, C37, C38].

Figure 1.

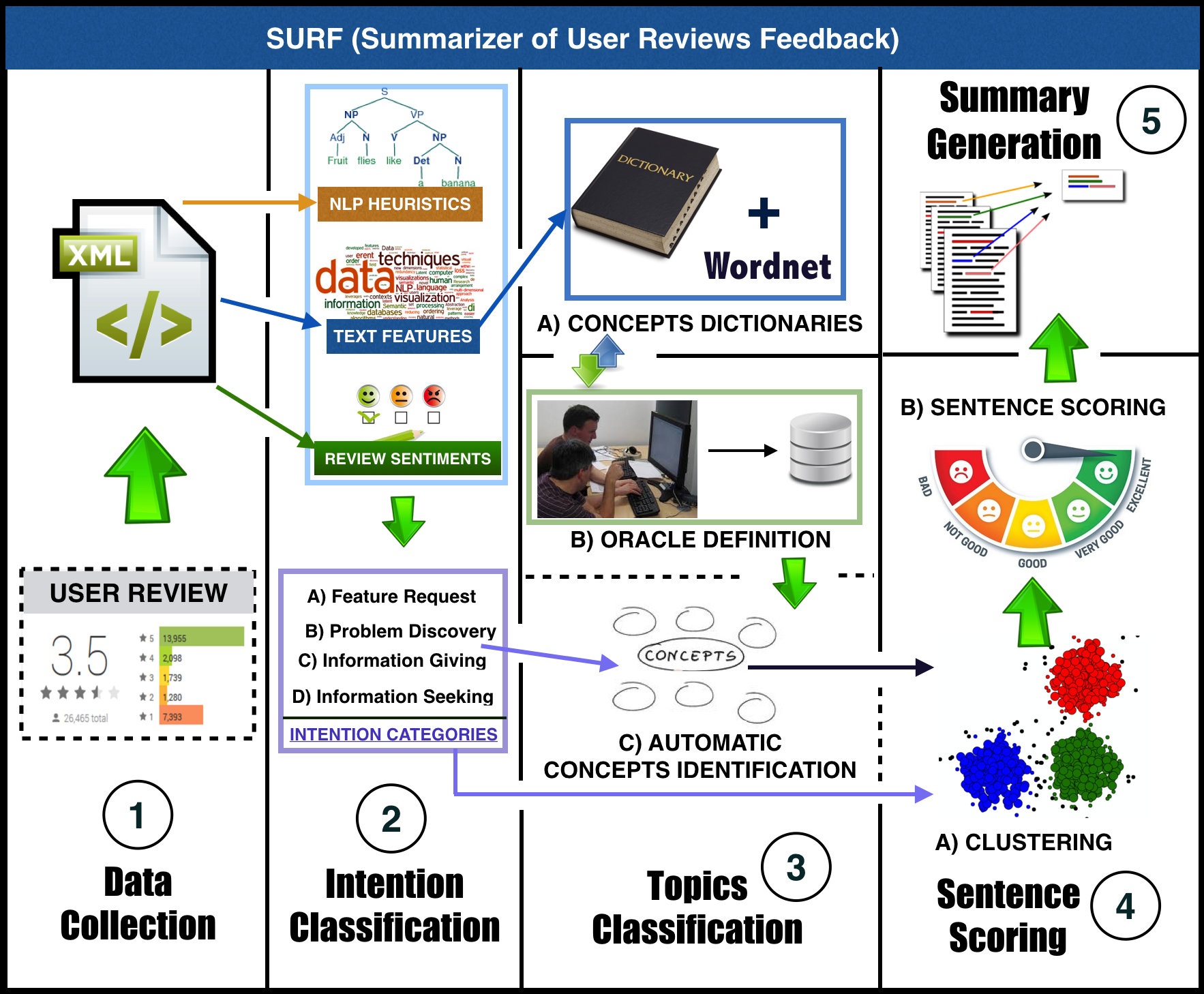

User Feedback Analysis in the Mobile Context.

Mobile user feedback, available in different forms (e.g., user reviews, recorded videos, A/B testing strategies), can be used by developers to decide future directions of development or maintenance activities. Therefore, user feedback represents a valuable resource for evolving software applications [C23, C24, C26, C27, C28, C29, C32, C34, C36, C37, C38, C44, J7].

As a consequence, mobile development would strongly benefit from integrating User Feedback in the Loop (UFL) of the release cycle [C23] (as highlighted by the blue elements/lines in Fig. 1), especially for testing and maintenance activities. This has pushed us to study more effective automated solutions to “enable the collection and integration of user feedback information in the development process” [C23, C24, C28, C29, C32, C34, C36, C37, C38]. The key idea of user feedback analysis techniques is to model [C23, C24, C26, C28, C29, C32, C34, C36, C37, C38], classify [C23, C24, C26, C29, C32, C34, C36, C37, C38], summarize [C23, C38], or cluster [C28] user feedback in order to integrate it into the release cycle. The research challenge is to effectively extract useful feedback that actively supports developers in accomplishing different release cycle tasks [C23, C24, C26, C27, C28, C29, C32, C34, C36, C37, C38, C44, J7].
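To make the "classify" step concrete, the following is a minimal sketch of sorting app reviews into categories with keyword rules. It is not the approach of the cited papers, which rely on richer NLP and machine learning; the category names, the keyword lists, and the functions `mentions` and `classify_review` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical categories and keywords, for illustration only; they are not
# taken from the project's papers or tools.
CATEGORY_KEYWORDS = {
    "bug_report": ("crash", "crashes", "bug", "error", "freezes", "not working"),
    "feature_request": ("please add", "would be nice", "wish", "should have"),
}


def mentions(keyword: str, text: str) -> bool:
    """Return True if `keyword` occurs in `text` as a whole word or phrase."""
    return re.search(r"\b" + re.escape(keyword) + r"\b", text) is not None


def classify_review(review: str) -> str:
    """Return the first category whose keywords appear in the review, else 'other'."""
    text = review.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(mentions(keyword, text) for keyword in keywords):
            return category
    return "other"
```

For example, "The app crashes every time I open the camera." is labeled `bug_report`, while "Please add a dark mode." is labeled `feature_request`. Whole-word matching keeps "debug" from counting as "bug". Because categories are checked in order, a review that both reports a crash and asks for a feature is labeled as a bug report. A learned classifier would avoid this kind of hard-coded priority.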

Mobile Testing and Source Code Localization based on User Feedback Analysis.

User feedback analysis can provide developers with information about the changes needed to achieve better user satisfaction and mobile app success. However, user review analysis alone is not sufficient to concretely help developers continuously integrate user feedback information into the release cycle, in particular into (i) maintenance [C23, C24, C26, C27, C28, C29, C32, C34, C37, C38, C40, C44, J7, J6] and (ii) testing [J8, C41, C39, C36, C35, C34] activities.

Recent research directions push the boundaries of user feedback analysis towards “change-request file localization” [C38, C37, C28, C26], “defect detection” [C27, C44, J7], and “user-oriented testing” (where user feedback is systematically integrated into the testing process) [C34, C36, C38, C35, J8, C41].

Finally, further empirical studies were conducted to better understand contemporary development challenges [C31, C33, C38, J6, C40] and thereby better support mobile maintenance tasks.

-------------------------------------

(2) Work packages (WPs):

Multi-source Interlinking Mechanism. This track focuses on using the novel types of data created in the first track to conceive novel techniques that interlink customer requests (the implementation of new features, bug fixing, or the improvement of existing features) with source code entities (or components). Its goal is to define a new mechanism that (i) links maintenance tasks mined from user feedback in app stores to the source code entities (or components) that should be changed to address such requests, and (ii) interlinks the code concerns highlighted in the previous track with the software artifacts that should actually be changed to fix these problems.

Papers linked to this WP: [C23], [C24], [C26], [C28], [C32]
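To illustrate the idea of linking a user request to the source code entities that may need to change, here is a minimal sketch that ranks source files by token overlap (Jaccard similarity) with a review. This is a generic information-retrieval baseline swapped in for illustration, not the mechanism developed in the project; the file names, their contents, and the functions `tokenize`, `jaccard`, and `rank_files` are hypothetical.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Split camelCase identifiers and prose into lowercase tokens longer than 2 chars."""
    spaced = re.sub(r"([a-z])([A-Z])", r"\1 \2", text)
    return {t.lower() for t in re.findall(r"[A-Za-z]+", spaced) if len(t) > 2}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two token sets (0.0 if either set is empty)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_files(review: str, files: dict[str, str]) -> list[tuple[str, float]]:
    """Rank files (path -> source text) by similarity to the review, best first."""
    review_tokens = tokenize(review)
    scores = [(path, jaccard(review_tokens, tokenize(src))) for path, src in files.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical example data.
FILES = {
    "CameraActivity.java": "class CameraActivity { void openCamera() {} void takePhoto() {} }",
    "LoginActivity.java": "class LoginActivity { void login(String password) {} }",
}
```

With the review "The app crashes when I open the camera to take a photo", `rank_files` places `CameraActivity.java` first, because identifiers such as `openCamera` and `takePhoto` share the tokens "open", "camera", "take", and "photo" with the review. Splitting camelCase is the design choice that lets prose match code identifiers at all. A realistic linker would also need stemming, stop-word removal, and weighting (e.g., TF-IDF).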

Spotting Security Risk, Legal Issues & Other Concerns. This track focuses on using the novel types of data created in the previous two tracks to help developers prevent the delivery of low-quality apps by (i) directly spotting vulnerabilities of mobile software (before or after its delivery) and (ii) identifying, and pointing developers to, the legal issues related to software mobility that may occur in mobile applications. Moreover, we develop novel strategies based on dynamic and static analysis that suggest solutions directly consumable by developers for handling recurrent memory and energy usage issues (whose related source code entities are located in the previous track).

Papers linked to this WP: [C44], [C27], [C34], [C35], [C36], [C40]

Linking Developers & Feedback-mechanisms. This track focuses on using the novel types of data created in all the previous tracks to depict historical facts coming from diverse sources. The information will be described and presented in a developer-centric way, depending on the role played by each mobile developer (e.g., tester, architect) and on the task to perform in the context of mobile software development (e.g., testing the app, fixing a bug, or enhancing a feature). In this track we also plan to define a learning approach that determines which kinds of user feedback were and were not addressed by developers in the past; this historical information will then be used to present to developers, earlier, the incoming user feedback that is more likely to be addressed.

Papers linked to this WP: [C31], [C33], [J6], [J8], [C39], [C41], [C42], [C43], [C44], [J7]
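The planned learning approach, which estimates from history which kinds of feedback get addressed and surfaces likely-addressed feedback earlier, can be sketched in its simplest form as a per-category success rate with Laplace smoothing. This is an assumption-laden toy, not the approach defined in the project. The category labels, the `alpha` and `default` parameters, and the functions `addressed_rates` and `prioritize` are hypothetical.

```python
from collections import Counter


def addressed_rates(history, alpha=1.0):
    """Estimate, per feedback category, the fraction of past feedback that was addressed.

    history: iterable of (category, was_addressed) pairs.
    alpha: Laplace smoothing, so categories seen only a few times are not
    scored exactly 0 or 1.
    """
    total = Counter()
    addressed = Counter()
    for category, was_addressed in history:
        total[category] += 1
        if was_addressed:
            addressed[category] += 1
    return {c: (addressed[c] + alpha) / (total[c] + 2 * alpha) for c in total}


def prioritize(incoming, rates, default=0.5):
    """Sort incoming (feedback_id, category) pairs by estimated chance of being addressed.

    Categories never seen in the history fall back to `default`.
    """
    return sorted(incoming, key=lambda item: rates.get(item[1], default), reverse=True)
```

For instance, if two of three past bug reports were addressed and neither of two feature requests was, the smoothed rates are (2 + 1) / (3 + 2) = 0.6 and (0 + 1) / (2 + 2) = 0.25. Incoming bug reports are therefore shown first, feedback of an unseen category next (0.5), and feature requests last. A real model would use features of the feedback text rather than a single category label.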

A Feedback-mechanism for Users. In this last track, we are interested in enabling a feedback mechanism for users who might want to be alerted when developers are performing maintenance and development tasks. Specifically, users want to know whether their feedback is directly taken into account by developers during app maintenance, e.g., whether developers are implementing the requested new features or fixing specific bugs highlighted in previous user reviews. This track will use the multi-source interlinking implemented in the second track (and extended in the subsequent tracks) to automatically enable a feedback mechanism that alerts users when the app developers are addressing their requests. In addition, we plan to include an alert mechanism that warns users about potential violations and legal issues related to app mobility.

Papers linked to this WP: [C29], [C37], [C38]

-------------------------------------

indicates ZENODO repositories, GitHub repositories, and web pages linking to datasets, tools, and/or replication packages of the published papers.


refer to the SLIDES of the published papers.