Event cameras such as the Dynamic Vision Sensor (DVS) are bio-inspired vision sensors that output pixel-level brightness changes instead of standard intensity frames. They offer significant advantages over standard cameras, namely a very high dynamic range, no motion blur, and a latency in the order of microseconds. However, because the output is composed of a sequence of asynchronous events rather than actual intensity images, traditional vision algorithms cannot be applied, so that new algorithms that exploit the high temporal resolution and the asynchronous nature of the sensor are required.

Do you want to know more about event cameras or play with them? Check out our tutorial on event cameras and our event-camera dataset, which also includes intensity images, IMU, ground truth, synthetic data, as well as an event-camera simulator!

References

G. Gallego, T. Delbruck, G. Orchard, C. Bartolozzi, B. Taba, A. Censi, S. Leutenegger, A. Davison, J. Conradt, K. Daniilidis, D. Scaramuzza. Event-based Vision: A Survey. arXiv, 2019.

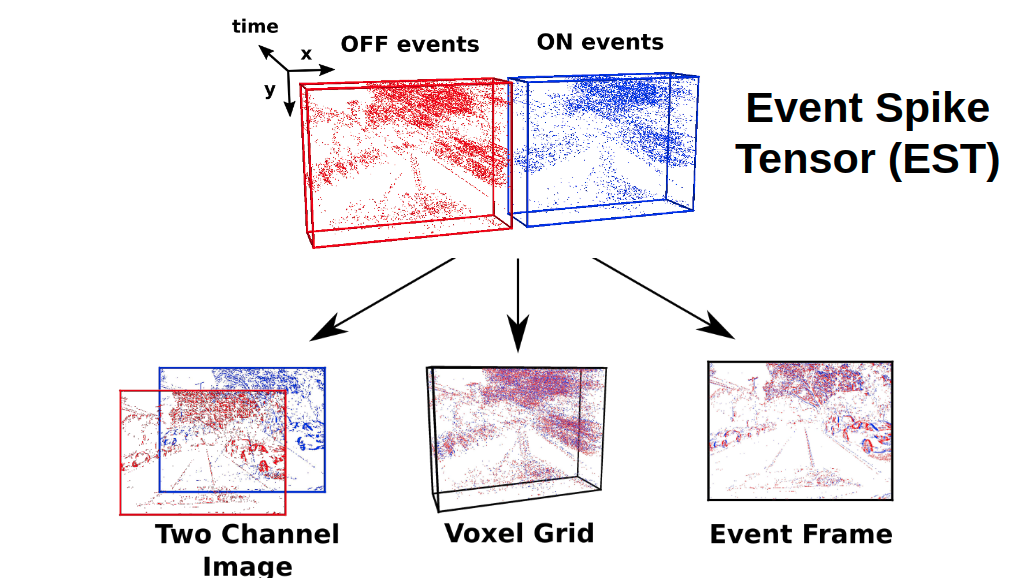

Event cameras are vision sensors that record asynchronous streams of per-pixel brightness changes, referred to as "events". They have appealing advantages over frame-based cameras for computer vision, including high temporal resolution, high dynamic range, and no motion blur. Due to the sparse, non-uniform spatiotemporal layout of the event signal, pattern recognition algorithms typically aggregate events into a grid-based representation and subsequently process it with a standard vision pipeline, e.g., a Convolutional Neural Network (CNN). In this work, we introduce a general framework to convert event streams into grid-based representations through a sequence of differentiable operations. Our framework comes with two main advantages: (i) it allows learning the input event representation together with the task-dedicated network in an end-to-end manner, and (ii) it lays out a taxonomy that unifies the majority of extant event representations in the literature and identifies novel ones. Empirically, we show that our approach to learning the event representation end-to-end yields an improvement of approximately 12% on optical flow estimation and object recognition over state-of-the-art methods.
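To make the idea of a grid-based representation concrete, the following minimal sketch aggregates events into a voxel grid, spreading each event over neighboring temporal bins with a fixed triangular kernel. This is only an illustration: the function name, the event tuple layout `(t, x, y, polarity)`, and the hand-picked kernel are assumptions, whereas the framework described above learns the kernel end to end.

```python
def events_to_voxel_grid(events, width, height, num_bins):
    """Aggregate time-sorted events (t, x, y, polarity) into a
    num_bins x height x width grid (nested lists).

    Assumption: a fixed triangular kernel in time; a learned kernel
    would replace the weight computation below.
    """
    grid = [[[0.0] * width for _ in range(height)] for _ in range(num_bins)]
    if not events:
        return grid
    t_first = events[0][0]
    t_last = events[-1][0]
    span = max(t_last - t_first, 1e-9)  # avoid division by zero
    for t, x, y, polarity in events:
        # Continuous bin coordinate in [0, num_bins - 1].
        tau = (t - t_first) / span * (num_bins - 1)
        for b in range(num_bins):
            weight = max(0.0, 1.0 - abs(tau - b))  # triangular kernel
            if weight > 0.0:
                grid[b][y][x] += polarity * weight
    return grid
```

The resulting grid can be fed to a standard CNN as a multi-channel image, with one channel per temporal bin.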

References

D. Gehrig, A. Loquercio, K. G. Derpanis, D. Scaramuzza. End-to-End Learning of Representations for Asynchronous Event-Based Data. (Under review)

References

G. Gallego, M. Gehrig, D. Scaramuzza. Focus Is All You Need: Loss Functions for Event-based Vision. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, 2019.

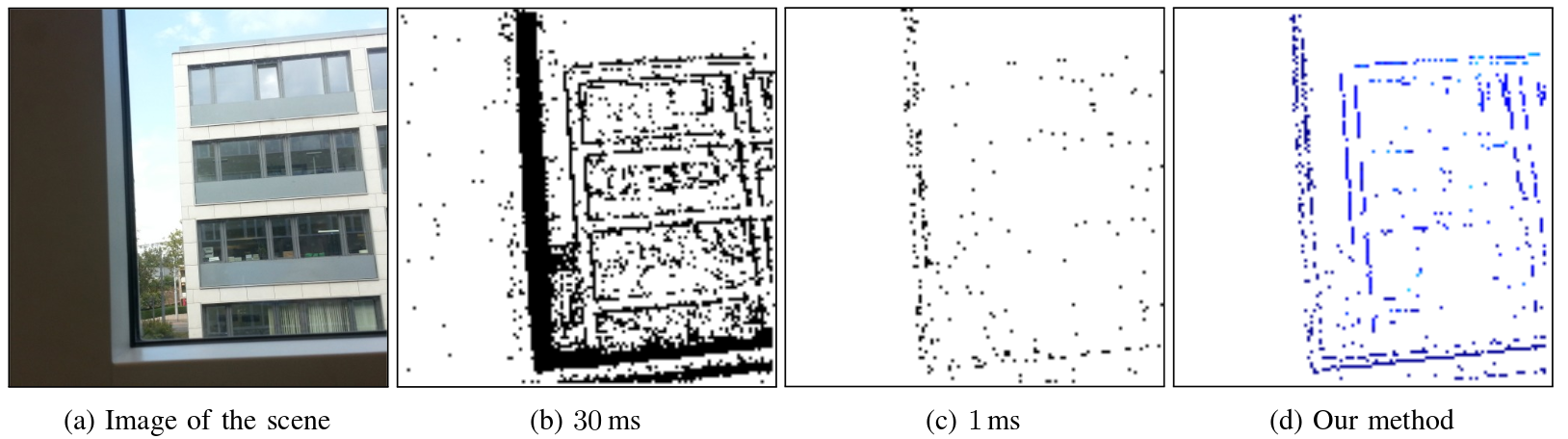

In contrast to traditional cameras, whose pixels have a common exposure time, event-based cameras are novel bio-inspired sensors whose pixels work independently and asynchronously output intensity changes (called "events"), with microsecond resolution. Since events are caused by the apparent motion of objects, event-based cameras sample visual information based on the scene dynamics and are, therefore, a more natural fit than traditional cameras to acquire motion, especially at high speeds, where traditional cameras suffer from motion blur. However, distinguishing between events caused by different moving objects and by the camera's ego-motion is a challenging task. We present the first per-event segmentation method for splitting a scene into independently moving objects. Our method jointly estimates the event-object associations (i.e., segmentation) and the motion parameters of the objects (or the background) by maximization of an objective function, which builds upon recent results on event-based motion-compensation. We provide a thorough evaluation of our method on a public dataset, outperforming the state-of-the-art by as much as 10%. We also show the first quantitative evaluation of a segmentation algorithm for event cameras, yielding around 90% accuracy at 4 pixels relative displacement.

References

T. Stoffregen, G. Gallego, T. Drummond, L. Kleeman, D. Scaramuzza. Event-Based Motion Segmentation by Motion Compensation. arXiv, 2019.

Event cameras are novel bio-inspired vision sensors that output pixel-level intensity changes, called "events", instead of traditional video images. These asynchronous sensors naturally respond to motion in the scene with very low latency (in the order of microseconds) and have a very high dynamic range. These features, along with a very low power consumption, make event cameras an ideal sensor for fast robot localization and wearable applications, such as AR/VR and gaming. Considering these applications, we present a method to track the 6-DOF pose of an event camera in a known environment, which we assume to be described by a photometric 3D map (i.e., intensity plus depth information) built via classic dense 3D reconstruction algorithms. Our approach uses the raw events directly, without intermediate features, within a maximum-likelihood framework to estimate the camera motion that best explains the events via a generative model. We successfully evaluate the method using both simulated and real data, and show improved results over the state of the art. We release the datasets to the public to foster reproducibility and research in this topic.

References

S. Bryner, G. Gallego, H. Rebecq, D. Scaramuzza. Event-based, Direct Camera Tracking from a Photometric 3D Map using Nonlinear Optimization. IEEE International Conference on Robotics and Automation (ICRA), 2019.

Event cameras measure changes of intensity asynchronously, in the form of a stream of events, which encode per-pixel brightness changes. In the last few years, their outstanding properties (asynchronous sensing, no motion blur, high dynamic range) have led to exciting vision applications with very low latency and high robustness. However, these sensors are still scarce and expensive, which slows down the progress of the research community. To address these issues, there is a huge demand for cheap, high-quality, synthetic, labeled events for algorithm prototyping, deep learning, and algorithm benchmarking. The development of such a simulator, however, is not trivial since event cameras work fundamentally differently from frame-based cameras. We present the first event camera simulator that can generate a large amount of reliable event data. The key component of our simulator is a theoretically sound, adaptive rendering scheme that only samples frames when necessary, through a tight coupling between the rendering engine and the event simulator. We release ESIM as open source.
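The difference from frame-based cameras can be illustrated with the commonly used idealized event generation model: a pixel fires an event whenever its log-brightness has changed by a contrast threshold since its last event. The sketch below applies this model to a single pixel given sampled intensities. It is not ESIM's adaptive rendering scheme; the function name, the threshold value, and the linear interpolation of event timestamps between samples are assumptions made for illustration.

```python
import math


def simulate_pixel_events(times, intensities, contrast_threshold=0.2):
    """Return a list of (timestamp, polarity) events for one pixel.

    Assumptions: intensities are strictly positive, and log-brightness
    varies linearly between consecutive samples.
    """
    events = []
    reference = math.log(intensities[0])  # log-brightness at the last event
    prev_t, prev_log = times[0], reference
    for t, intensity in zip(times[1:], intensities[1:]):
        cur_log = math.log(intensity)
        # One event per threshold crossing between the two samples.
        while abs(cur_log - reference) >= contrast_threshold:
            polarity = 1 if cur_log > reference else -1
            reference += polarity * contrast_threshold
            # Interpolate the time at which the crossing happened.
            fraction = (reference - prev_log) / (cur_log - prev_log)
            events.append((prev_t + fraction * (t - prev_t), polarity))
        prev_t, prev_log = t, cur_log
    return events
```

If the brightness changes quickly between two samples, several events fall between them, which is why a fixed sampling rate is either wasteful or too coarse and an adaptive scheme is attractive.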

H. Rebecq, D. Gehrig, D. Scaramuzza. ESIM: an Open Event Camera Simulator. Conference on Robot Learning (CoRL), Zurich, 2018.

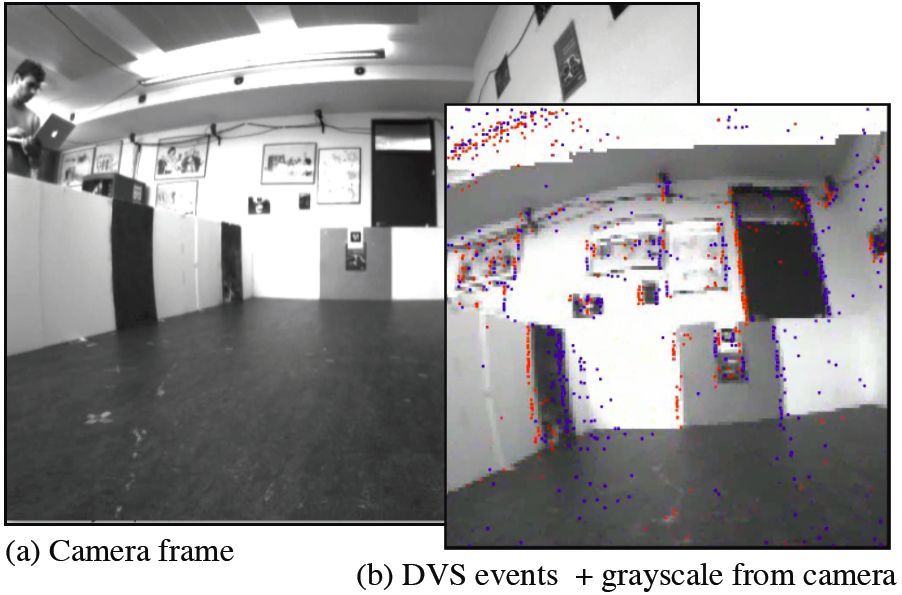

We present a method that leverages the complementarity of event cameras and standard cameras to track visual features with low latency. Event cameras are novel sensors that output pixel-level brightness changes, called "events". They offer significant advantages over standard cameras, namely a very high dynamic range, no motion blur, and a latency in the order of microseconds. However, because the same scene pattern can produce different events depending on the motion direction, establishing event correspondences across time is challenging. By contrast, standard cameras provide intensity measurements (frames) that do not depend on motion direction. Our method extracts features on frames and subsequently tracks them asynchronously using events, thereby exploiting the best of both types of data: the frames provide a photometric representation that does not depend on motion direction and the events provide low latency updates. In contrast to previous works, which are based on heuristics, this is the first principled method that uses raw intensity measurements directly, based on a generative event model within a maximum-likelihood framework. As a result, our method produces feature tracks that are both more accurate (subpixel accuracy) and longer than the state of the art, across a wide variety of scenes.

D. Gehrig, H. Rebecq, G. Gallego, D. Scaramuzza. Asynchronous, Photometric Feature Tracking using Events and Frames. European Conference on Computer Vision (ECCV), Munich, 2018. Oral Presentation. Oral Acceptance Rate: 2.4%.

Event cameras are bio-inspired sensors that offer several advantages, such as low latency, high speed, and high dynamic range, to tackle challenging scenarios in computer vision. This paper presents a solution to the problem of 3D reconstruction from data captured by a stereo event-camera rig moving in a static scene, such as in the context of stereo Simultaneous Localization and Mapping. The proposed method consists of the optimization of an energy function designed to exploit small-baseline spatio-temporal consistency of events triggered across both stereo image planes. To improve the density of the reconstruction and to reduce the uncertainty of the estimation, a probabilistic depth-fusion strategy is also developed. The resulting method has no special requirements on either the motion of the stereo event-camera rig or on prior knowledge about the scene. Experiments demonstrate that our method can deal with both texture-rich and sparse scenes, outperforming state-of-the-art stereo methods based on event data image representations.

Y. Zhou, G. Gallego, H. Rebecq, L. Kneip, H. Li, D. Scaramuzza. Semi-Dense 3D Reconstruction with a Stereo Event Camera. European Conference on Computer Vision (ECCV), Munich, 2018.

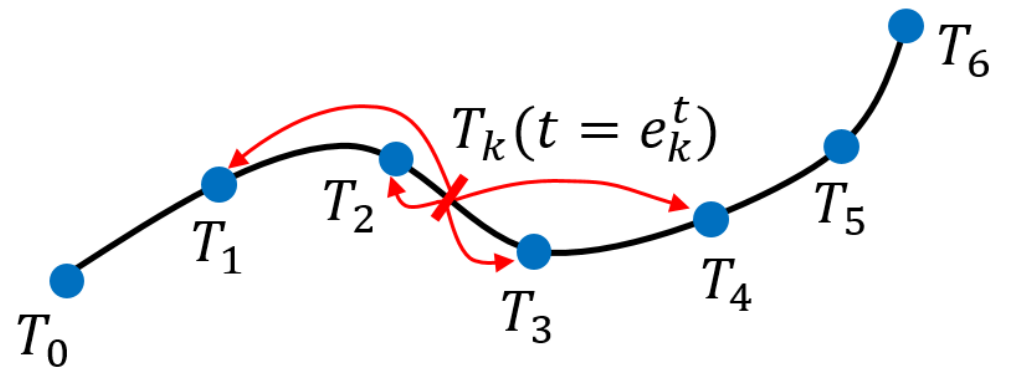

In this paper, we leverage a continuous-time framework to perform visual-inertial odometry with an event camera. This framework allows direct integration of the asynchronous events with micro-second accuracy and the inertial measurements at high frequency. The event camera trajectory is approximated by a smooth curve in the space of rigid-body motions using cubic splines. This formulation significantly reduces the number of variables in trajectory estimation problems. We evaluate our method on real data from several scenes and compare the results against ground truth from a motion-capture system. We show that our method provides improved accuracy over the result of a state-of-the-art visual odometry method for event cameras. We also show that both the map orientation and scale can be recovered accurately by fusing events and inertial data. To the best of our knowledge, this is the first work on visual-inertial fusion with event cameras using a continuous-time framework.
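The reduction in the number of variables can be seen in a small sketch: with a uniform cubic B-spline, the position at any event timestamp is a weighted sum of only four control points. The code below covers translation only; the formulation described above lives in the space of rigid-body motions, which requires a Lie-group treatment that is omitted here. Function names and the uniform knot spacing `knot_dt` are assumptions.

```python
import math


def bspline_weights(u):
    """Uniform cubic B-spline basis weights for u in [0, 1]."""
    return (
        (1 - u) ** 3 / 6.0,
        (3 * u**3 - 6 * u**2 + 4) / 6.0,
        (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6.0,
        u**3 / 6.0,
    )


def spline_position(control_points, knot_dt, t):
    """Evaluate the spline at time t (translation only, illustrative)."""
    s = t / knot_dt
    # Index of the first of the four control points that influence time t.
    i = max(0, min(int(math.floor(s)), len(control_points) - 4))
    weights = bspline_weights(s - i)
    points = control_points[i:i + 4]
    dims = len(points[0])
    return tuple(
        sum(w * p[d] for w, p in zip(weights, points)) for d in range(dims)
    )
```

A handful of control points thus defines the trajectory at every event timestamp, instead of one pose variable per event.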

Continuous-Time Visual-Inertial Odometry for Event Cameras. IEEE Transactions on Robotics, 2018.

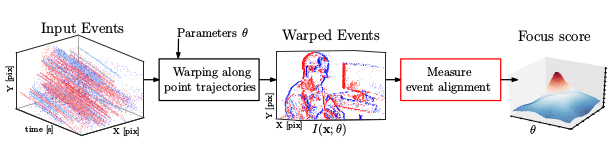

We present a unifying framework to solve several computer vision problems with event cameras: motion, depth and optical flow estimation. The main idea of our framework is to find the point trajectories on the image plane that are best aligned with the event data by maximizing an objective function: the contrast of an image of warped events. Our method implicitly handles data association between the events, and therefore, does not rely on additional appearance information about the scene. In addition to accurately recovering the motion parameters of the problem, our framework produces motion-corrected edge-like images with high dynamic range that can be used for further scene analysis. The proposed method is not only simple, but more importantly, it is, to the best of our knowledge, the first method that can be successfully applied to such a diverse set of important vision tasks with event cameras.
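As a minimal sketch of the contrast maximization idea, the code below warps events to a reference time under a candidate constant image-plane velocity, accumulates them into an image of warped events, and scores the candidate by the variance of that image. The brute-force search over a candidate list, the nearest-pixel accumulation, and all function names are simplifying assumptions; the framework above covers more general warps and objective optimization.

```python
def warped_event_image(events, velocity, width, height, t_ref=0.0):
    """Accumulate events (t, x, y) warped to t_ref along `velocity` (px/s)."""
    vx, vy = velocity
    image = [[0.0] * width for _ in range(height)]
    for t, x, y in events:
        xw = int(round(x - vx * (t - t_ref)))
        yw = int(round(y - vy * (t - t_ref)))
        if 0 <= xw < width and 0 <= yw < height:
            image[yw][xw] += 1.0
    return image


def contrast(image):
    """Variance of the image of warped events."""
    values = [v for row in image for v in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def estimate_velocity(events, candidates, width, height):
    """Pick the candidate velocity that yields the sharpest warped image."""
    return max(
        candidates,
        key=lambda v: contrast(warped_event_image(events, v, width, height)),
    )
```

With the correct velocity, events triggered by the same edge land on the same pixels, so the image is sharp and its variance is high; a wrong velocity smears them out.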

A Unifying Contrast Maximization Framework for Event Cameras, with Applications to Motion, Depth and Optical Flow Estimation. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, 2018. Spotlight Presentation.

Event cameras are bio-inspired vision sensors that naturally capture the dynamics of a scene, filtering out redundant information. This paper presents a deep neural network approach that unlocks the potential of event cameras on a challenging motion-estimation task: prediction of a vehicle's steering angle. To make the best out of this sensor-algorithm combination, we adapt state-of-the-art convolutional architectures to the output of event sensors and extensively evaluate the performance of our approach on a publicly available large scale event-camera dataset (~1000 km). We present qualitative and quantitative explanations of why event cameras allow robust steering prediction even in cases where traditional cameras fail, e.g. challenging illumination conditions and fast motion. Finally, we demonstrate the advantages of leveraging transfer learning from traditional to event-based vision, and show that our approach outperforms state-of-the-art algorithms based on standard cameras.

Event-based Vision meets Deep Learning on Steering Prediction for Self-driving Cars. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, 2018.

In this paper, we present the first state estimation pipeline that leverages the complementary advantages of a standard camera and an event camera by fusing events, standard frames, and inertial measurements in a tightly coupled manner. We show on the Event Camera Dataset that our hybrid pipeline leads to an accuracy improvement of 130% over event-only pipelines, and 85% over standard-frame-only visual-inertial systems, while still being computationally tractable.

Furthermore, we use our pipeline to demonstrate - to the best of our knowledge - the first autonomous quadrotor flight using an event camera for state estimation, unlocking flight scenarios that were not reachable with traditional visual inertial odometry, such as low-light environments and high dynamic range scenes.

Ultimate SLAM? Combining Events, Images, and IMU for Robust Visual SLAM in HDR and High Speed Scenarios. IEEE Robotics and Automation Letters (RA-L), 2018.

We introduce the problem of event-based multi-view stereo (EMVS) for event cameras and propose a solution to it. Unlike traditional MVS methods, which address the problem of estimating dense 3D structure from a set of known viewpoints, EMVS estimates semi-dense 3D structure from an event camera with known trajectory. Our EMVS solution elegantly exploits two inherent properties of an event camera: (1) its ability to respond to scene edges - which naturally provide semi-dense geometric information without any preprocessing operation - and (2) the fact that it provides continuous measurements as the sensor moves. Despite its simplicity (it can be implemented in a few lines of code), our algorithm is able to produce accurate, semi-dense depth maps, without requiring any explicit data association or intensity estimation. We successfully validate our method on both synthetic and real data. Our method is computationally very efficient and runs in real-time on a CPU. We release the source code.

EMVS: Event-Based Multi-View Stereo - 3D Reconstruction with an Event Camera in Real-Time. International Journal of Computer Vision, 2017.

EMVS: Event-based Multi-View Stereo. British Machine Vision Conference (BMVC), York, 2016. Best Industry Paper Award! Oral Talk: Acceptance Rate 7%.

We present an event-based approach for ego-motion estimation, which provides pose updates upon the arrival of each event, thus virtually eliminating latency. Our method is the first work addressing and demonstrating event-based pose tracking in six degrees-of-freedom (DOF) motions in realistic and natural scenes, and it is able to track high-speed motions. The method is successfully evaluated in both indoor and outdoor scenes.

Event-based, 6-DOF Camera Tracking from Photometric Depth Maps. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017. (In press)

We propose a novel, accurate tightly-coupled visual-inertial odometry pipeline for event cameras that leverages their outstanding properties to estimate the camera ego-motion in challenging conditions, such as high-speed motion or high dynamic range scenes. Our pipeline can output poses at a rate proportional to the camera velocity and runs in real-time on a CPU.

The method tracks a set of features (extracted on the image plane) through time. To achieve that, we consider events in overlapping spatio-temporal windows and align them using the current camera motion and scene structure, yielding motion-compensated event frames. We then combine these feature tracks in a keyframe-based, visual-inertial odometry algorithm based on nonlinear optimization to estimate the camera's 6-DOF pose, velocity, and IMU biases.

We evaluated the proposed method quantitatively on the public Event Camera Dataset and it significantly outperforms the state-of-the-art, while being computationally much more efficient: our pipeline can run much faster than real-time on a laptop and even on a smartphone processor. Furthermore, we demonstrate qualitatively the accuracy and robustness of our pipeline on a large-scale dataset, and an extremely high-speed dataset recorded by spinning an event camera on a leash at 850 deg/s.

Real-time Visual-Inertial Odometry for Event Cameras using Keyframe-based Nonlinear Optimization. British Machine Vision Conference (BMVC), London, 2017. Oral Presentation. Acceptance Rate: 5.6%.

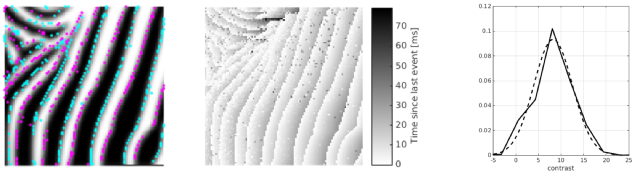

Inspired by frame-based pre-processing techniques that reduce an image to a set of features, which are typically the input to higher-level algorithms, we propose a method to reduce an event stream to a corner event stream. Our goal is twofold: extract relevant tracking information (corners do not suffer from the aperture problem) and decrease the event rate for later processing stages. Our event-based corner detector is very efficient due to its design principle, which consists of working on the Surface of Active Events (a map with the timestamp of the latest event at each pixel) using only comparison operations. Our method asynchronously processes event by event with very low latency. Our implementation is capable of processing millions of events per second on a single core (less than a micro-second per event) and reduces the event rate by a factor of 10 to 20.
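The Surface of Active Events itself is a simple data structure, sketched below: a per-pixel map that stores the timestamp of the latest event and is updated one event at a time. The class and method names are hypothetical, and the neighborhood query is only an example of a comparison-only operation on the map; the actual corner test of the detector is not reproduced here.

```python
class SurfaceOfActiveEvents:
    """Per-pixel map holding the timestamp of the latest event."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        # -inf marks pixels that have not fired yet.
        self.stamps = [[float("-inf")] * width for _ in range(height)]

    def update(self, x, y, t):
        """Process one event: overwrite the timestamp at its pixel."""
        self.stamps[y][x] = t

    def recent_neighbours(self, x, y, radius, t_min):
        """Neighbors of (x, y) whose latest event is not older than t_min.

        Uses only comparisons on stored timestamps (illustrative query).
        """
        found = []
        for v in range(max(0, y - radius), min(self.height, y + radius + 1)):
            for u in range(max(0, x - radius), min(self.width, x + radius + 1)):
                if (u, v) != (x, y) and self.stamps[v][u] >= t_min:
                    found.append((u, v))
        return found
```

Because each event touches a single cell and queries only compare timestamps in a small neighborhood, the per-event cost stays constant regardless of the event rate.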

Fast Event-based Corner Detection. British Machine Vision Conference (BMVC), London, 2017.

We present EVO, an Event-based Visual Odometry algorithm. Our algorithm successfully leverages the outstanding properties of event cameras to track fast camera motions while recovering a semi-dense 3D map of the environment. The implementation runs in real-time on a standard CPU and outputs up to several hundred pose estimates per second. Due to the nature of event cameras, our algorithm is unaffected by motion blur and operates very well in challenging, high dynamic range conditions with strong illumination changes. To achieve this, we combine a novel, event-based tracking approach based on image-to-model alignment with a recent event-based 3D reconstruction algorithm in a parallel fashion. Additionally, we show that the output of our pipeline can be used to reconstruct intensity images from the binary event stream, though our algorithm does not require such intensity information. We believe that this work makes significant progress in SLAM by unlocking the potential of event cameras. This allows us to tackle challenging scenarios that are currently inaccessible to standard cameras.

EVO: A Geometric Approach to Event-based 6-DOF Parallel Tracking and Mapping in Real-time. IEEE Robotics and Automation Letters (RA-L), 2016.

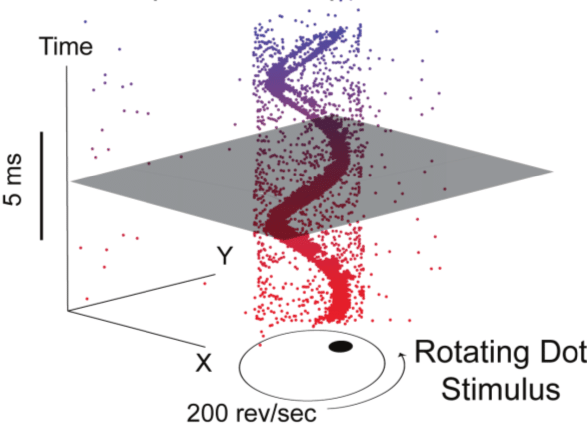

We present an algorithm to estimate the rotational motion of an event camera. In contrast to traditional cameras, which produce images at a fixed rate, event cameras have independent pixels that respond asynchronously to brightness changes, with microsecond resolution. Our method leverages the type of information conveyed by these novel sensors (that is, edges) to directly estimate the angular velocity of the camera, without requiring optical flow or image intensity estimation. The core of the method is a contrast maximization design. The method performs favorably against ground truth data and gyroscopic measurements from an Inertial Measurement Unit, even in the presence of very high-speed motions (close to 1000 deg/s).

Accurate Angular Velocity Estimation with an Event Camera. IEEE Robotics and Automation Letters (RA-L), 2016.

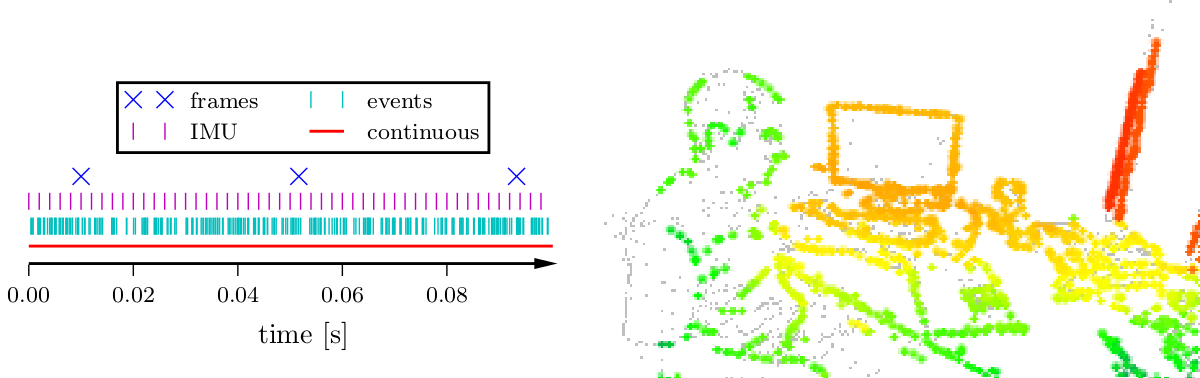

We present the world's first collection of datasets with an event-based camera for high-speed robotics. The data also include intensity images, inertial measurements, and ground truth from a motion-capture system. An event-based camera is a revolutionary vision sensor with three key advantages: a measurement rate that is almost 1 million times faster than standard cameras, a latency of 1 microsecond, and a high dynamic range of 130 decibels (standard cameras only have 60 dB). These properties enable the design of a new class of algorithms for high-speed robotics, where standard cameras suffer from motion blur and high latency. All the data are released both as text files and binary (i.e., rosbag) files. Find out more on the dataset website!

The Event-Camera Dataset and Simulator: Event-based Data for Pose Estimation, Visual Odometry, and SLAM. International Journal of Robotics Research, Vol. 36, Issue 2, pages 142-149, Feb. 2017.

We develop an event-based feature tracking algorithm for the DAVIS sensor and show how to integrate it in an event-based visual odometry pipeline. Features are first detected in the grayscale frames and then tracked asynchronously using the stream of events. The features are then fed to an event-based visual odometry pipeline that tightly interleaves robust pose optimization and probabilistic mapping. We show that our method successfully tracks the 6-DOF motion of the sensor in natural scenes.

Low-Latency Visual Odometry using Event-based Feature Tracks. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, 2016. Best Application Paper Award Finalist! Highlight Talk: Acceptance Rate 2.5%.

Feature Detection and Tracking with the Dynamic and Active-pixel Vision Sensor (DAVIS). International Conference on Event-Based Control, Communication and Signal Processing (EBCCSP), Krakow, 2016.

ELiSeD - An Event-Based Line Segment Detector. International Conference on Event-Based Control, Communication and Signal Processing (EBCCSP), Krakow, 2016.

In this paper, we address ego-motion estimation for an event-based vision sensor using a continuous-time framework to directly integrate the information conveyed by the sensor. The DVS pose trajectory is approximated by a smooth curve in the space of rigid-body motions using cubic splines and it is optimized according to the observed events. We evaluate our method using datasets acquired from sensor-in-the-loop simulations and onboard a quadrotor performing flips. The results are compared to the ground truth, showing the good performance of the proposed technique.

Continuous-Time Trajectory Estimation for Event-based Vision Sensors. Robotics: Science and Systems (RSS), Rome, 2015.

We tackle the problem of event-based camera localization in a known environment, without additional sensing, using a probabilistic generative event model in a Bayesian filtering framework. Our main contribution is the design of the likelihood function used in the filter to process the observed events. Based on the physical characteristics of the sensor and on empirical evidence of the Gaussian-like distribution of spiked events with respect to the brightness change, we propose to use the contrast residual as a measure of how well the estimated pose of the event-based camera and the environment explain the observed events. The filter allows for localization in the general case of six degrees-of-freedom motions.

Event-based Camera Pose Tracking using a Generative Event Model. arXiv:1510.01972, 2015.

We develop an algorithm that augments each event with its "lifetime", which is computed from the event's velocity on the image plane. The generated stream of augmented events gives a continuous representation of events in time, hence enabling the design of new algorithms that outperform those based on the accumulation of events over fixed, artificially-chosen time intervals. A direct application of this augmented stream is the construction of sharp gradient (edge-like) images at any time instant. We successfully demonstrate our method in different scenarios, including high-speed quadrotor flips, and compare it to standard visualization methods.
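A rough sketch of how such an augmented stream could be used is given below. It assumes that the lifetime is the time the underlying edge needs to travel one pixel, i.e., the inverse of the image-plane speed in pixels per second; this definition, the function names, and the event tuple layout are assumptions made for illustration, and the velocity estimation step itself is not shown.

```python
import math


def event_lifetime(vx, vy, pixel_distance=1.0):
    """Time (s) for an edge moving at (vx, vy) px/s to travel one pixel."""
    speed = math.hypot(vx, vy)
    return math.inf if speed == 0.0 else pixel_distance / speed


def augment(events_with_velocity):
    """Turn (t, x, y, vx, vy) into (t, x, y, lifetime)."""
    return [
        (t, x, y, event_lifetime(vx, vy))
        for t, x, y, vx, vy in events_with_velocity
    ]


def active_pixels(augmented_events, t_query):
    """Pixels whose event is still 'alive' at t_query.

    Rendering these pixels gives a sharp edge-like image at any time
    instant, without choosing a fixed accumulation interval.
    """
    return [
        (x, y)
        for t, x, y, lifetime in augmented_events
        if t <= t_query < t + lifetime
    ]
```

Fast-moving edges get short lifetimes and slow ones long lifetimes, so the rendered edges stay about one pixel thin at any speed.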

Lifetime Estimation of Events from Dynamic Vision Sensors. IEEE International Conference on Robotics and Automation (ICRA), Seattle, 2015.

In the last few years, we have witnessed impressive demonstrations of aggressive flights and acrobatics using quadrotors. However, those robots are actually blind. They do not see by themselves, but through the "eyes" of an external motion capture system. Flight maneuvers using onboard sensors are still slow compared to those attainable with motion capture systems. At the current state, the agility of a robot is limited by the latency of its perception pipeline. To obtain more agile robots, we need to use faster sensors. In this paper, we present the first onboard perception system for 6-DOF localization during high-speed maneuvers using a Dynamic Vision Sensor (DVS). Unlike a standard CMOS camera, a DVS does not wastefully send full image frames at a fixed frame rate. Instead, similar to the human eye, it only transmits pixel-level brightness changes at the time they occur, with microsecond resolution, thus offering the possibility to create a perception pipeline whose latency is negligible compared to the dynamics of the robot. We exploit these characteristics to estimate the pose of a quadrotor with respect to a known pattern during high-speed maneuvers, such as flips, with rotational speeds up to 1,200 degrees per second. Additionally, we provide a versatile method to capture ground-truth data using a DVS.

Event-based, 6-DOF Pose Tracking for High-Speed Maneuvers. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, 2014.

This paper presents the first visual odometry system based on a DVS plus a normal CMOS camera to provide the absolute brightness values. The two sources of data are automatically spatiotemporally calibrated from logs taken during normal operation. We design a visual odometry method that uses the DVS events to estimate the relative displacement since the previous CMOS frame by processing each event individually. Experiments show that the rotation can be estimated with surprising accuracy, while the translation can be estimated only very noisily, because it produces few events due to very small apparent motion.

Low-Latency Event-Based Visual Odometry. IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, 2014.

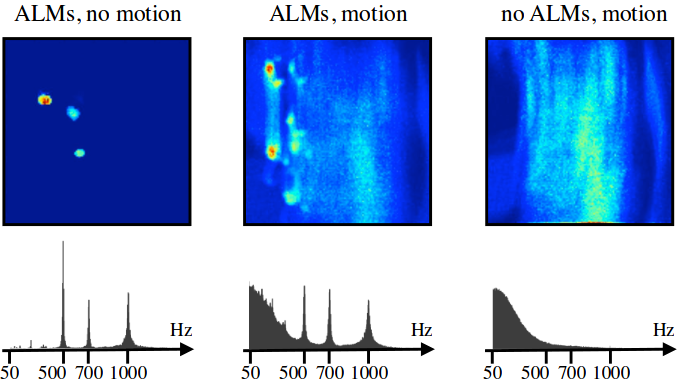

This paper presents a method for low-latency pose tracking using a DVS and Active LED Markers (ALMs), which are LEDs blinking at high frequency (>1 kHz). The sensor's time resolution allows distinguishing different frequencies, thus avoiding the need for data association. This approach is compared to traditional pose tracking based on a CMOS camera. The DVS performance is not affected by fast motion, unlike the CMOS camera, which suffers from motion blur.
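To illustrate how blinking frequency can replace data association, the sketch below estimates the blink frequency observed at one pixel from the intervals between consecutive ON events and matches it to the closest known marker frequency. It assumes one ON event per blink period at that pixel and uses hypothetical function names, marker labels, and tolerance; the detection procedure of the paper may differ.

```python
from statistics import median


def blink_frequency(on_timestamps):
    """Estimate blink frequency (Hz) from ON-event timestamps in seconds.

    Assumption: one ON event per blink period at this pixel.
    """
    intervals = [b - a for a, b in zip(on_timestamps, on_timestamps[1:])]
    if not intervals:
        return None
    # The median is robust to an occasional missed or spurious event.
    return 1.0 / median(intervals)


def identify_marker(frequency, marker_frequencies, tolerance_hz=100.0):
    """Return the name of the marker closest in frequency, or None."""
    if frequency is None:
        return None
    name, nominal = min(
        marker_frequencies.items(), key=lambda item: abs(item[1] - frequency)
    )
    return name if abs(nominal - frequency) <= tolerance_hz else None
```

Since each marker is identified by its own frequency, a detection directly carries the marker identity and no matching between detections and markers is needed.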

Low-latency localization by Active LED Markers tracking using a Dynamic Vision Sensor. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, 2013.