The Robotics and Perception Group (RPG), led by Prof. Davide Scaramuzza, was founded in early 2012 and is part of the Artificial Intelligence Lab of the University of Zurich.

Our mission is to develop autonomous machines that can navigate entirely on their own using only onboard sensors, mainly cameras, without relying on external reference systems such as GPS or motion capture systems. Our interests encompass both ground and micro flying robots, as well as heterogeneous multi-robot systems that combine the two. We want our machines to be active rather than passive: they should react to and navigate within the environment so as to gain the most knowledge from it.

Our research goal is to develop teams of micro aerial vehicles (MAVs) that can fly autonomously in city-like environments and assist humans in tasks such as rescue and monitoring. We focus on enabling autonomous navigation using vision and an IMU as the sole sensor modalities (i.e., no GPS, no laser).

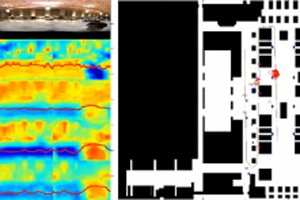

Given a car equipped with an omnidirectional camera, the motion of the vehicle can be recovered purely from salient features tracked over time. We propose the 1-Point RANSAC algorithm, which runs at 800 Hz on a standard laptop. To our knowledge, this is the most efficient visual odometry algorithm.
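
Why can a single point be enough? A minimal sketch, assuming the car moves on a plane along a locally circular arc (the nonholonomic constraint of a car-like vehicle), so that the translation direction is tied to the yaw angle θ as φ = θ/2 and the epipolar constraint depends on θ alone. The frame convention (x forward, y left, z up), the function names, the residual threshold, and the iteration formula below are our own illustrative choices, not the group's published code; the inputs are assumed to be unit bearing vectors already back-projected from the omnidirectional images.

```python
import math
import random
import statistics
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pair = Tuple[Vec3, Vec3]  # (unit bearing in frame 1, unit bearing in frame 2)


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def yaw_from_one_point(b1: Vec3, b2: Vec3) -> float:
    """Solve b1 . (t x Rz(theta) b2) = 0 with t = (cos(theta/2), sin(theta/2), 0).

    Under the assumed convention this expands to
    tan(theta/2) = (y1*z2 - z1*y2) / (x1*z2 + z1*x2).
    """
    x1, y1, z1 = b1
    x2, y2, z2 = b2
    half = math.atan2(y1 * z2 - z1 * y2, x1 * z2 + z1 * x2)
    # theta/2 is only defined modulo pi; keep it in (-pi/2, pi/2].
    if half > math.pi / 2:
        half -= math.pi
    elif half <= -math.pi / 2:
        half += math.pi
    return 2.0 * half


def epipolar_residual(theta: float, b1: Vec3, b2: Vec3) -> float:
    """Coplanarity error |b1 . (t x R b2)| for the yaw hypothesis theta."""
    c, s = math.cos(theta), math.sin(theta)
    rb2 = (c * b2[0] - s * b2[1], s * b2[0] + c * b2[1], b2[2])
    t = (math.cos(theta / 2), math.sin(theta / 2), 0.0)
    return abs(_dot(b1, _cross(t, rb2)))


def one_point_ransac(pairs: Sequence[Pair], threshold: float = 1e-3,
                     inlier_ratio: float = 0.5, confidence: float = 0.999,
                     rng: Optional[random.Random] = None) -> Tuple[float, List[int]]:
    if rng is None:
        rng = random.Random(0)
    # One correspondence per hypothesis: N = log(1 - p) / log(1 - w) (10 with defaults).
    iterations = math.ceil(math.log(1 - confidence) / math.log(1 - inlier_ratio))
    best: List[int] = []
    for _ in range(iterations):
        theta = yaw_from_one_point(*rng.choice(pairs))
        inliers = [i for i, (b1, b2) in enumerate(pairs)
                   if epipolar_residual(theta, b1, b2) < threshold]
        if len(inliers) > len(best):
            best = inliers
    # Refine with the median of the single-point hypotheses over the consensus set.
    theta = statistics.median(yaw_from_one_point(*pairs[i]) for i in best)
    return theta, best
```

Because each hypothesis needs only one correspondence, the required number of iterations grows far more slowly with the outlier ratio than it does for five- or eight-point models. Points at the camera's own height (z = 0) are degenerate under planar motion and constrain nothing.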

Most work on localization, mapping, and navigation for ground and aerial vehicles has relied on point landmarks or occupancy grids, built with vision or laser range finders. However, for these robots to one day cooperate with humans in complex scenarios, we need to build semantic maps of the environment.

This project addresses the extrinsic calibration between an omnidirectional camera and a 3D laser range finder. The aim is to precisely map the color information captured by the camera onto the 3D laser points.
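
Once the extrinsic rotation R and translation t from the laser frame to the camera frame are known, coloring the scan amounts to transforming each laser point into the camera frame, projecting it, and sampling the image. A minimal sketch, assuming a hypothetical `project` callable that maps a camera-frame 3D point to pixel coordinates (for an omnidirectional camera this would be its calibrated camera model) and an image stored as rows of RGB tuples. It shows how a calibration result would be used, not how it is estimated.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
RGB = Tuple[int, int, int]


def colorize_scan(points_laser: Sequence[Vec3], R: Mat3, t: Vec3,
                  project: Callable[[Vec3], Optional[Tuple[float, float]]],
                  image: Sequence[Sequence[RGB]]) -> List[Optional[RGB]]:
    """Return one color per laser point, or None if it does not land in the image."""
    height, width = len(image), len(image[0])
    colors: List[Optional[RGB]] = []
    for p in points_laser:
        # Rigid transform laser -> camera: X_c = R X_l + t.
        xc = tuple(sum(R[r][k] * p[k] for k in range(3)) + t[r] for r in range(3))
        uv = project(xc)
        if uv is None:  # the camera model cannot project this point
            colors.append(None)
            continue
        # Nearest-neighbour lookup: u indexes columns, v indexes rows.
        col, row = int(round(uv[0])), int(round(uv[1]))
        if 0 <= row < height and 0 <= col < width:
            colors.append(image[row][col])
        else:
            colors.append(None)
    return colors
```

Passing the projection in as a function keeps the coloring step independent of the camera model, so the same loop works for a fisheye, a catadioptric camera, or a toy pinhole used for testing.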

We develop a novel method for robustly tracking vertical features in omnidirectional images. Matching robustness is achieved by a feature descriptor that is invariant to rotation and to slight changes in illumination.
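
A common way to obtain these two invariances is to sample the descriptor in a frame attached to the feature itself and to normalize away intensity gain and offset. A minimal sketch, not the descriptor used in the original work: it assumes that vertical world lines appear as radial lines through the image center (as for a central omnidirectional camera whose axis is vertical), samples a hypothetical grid of intensities in that line's radial/tangential frame, and normalizes the samples to zero mean and unit norm. A rotation about the image center then leaves the sampling pattern unchanged relative to the line (up to resampling), and an affine intensity change I' = aI + b with a > 0 leaves the descriptor unchanged.

```python
import math
from typing import List, Sequence, Tuple


def radial_descriptor(image: Sequence[Sequence[float]], center: Tuple[float, float],
                      phi: float, radii: Sequence[float],
                      offsets: Sequence[float]) -> List[float]:
    """Sample a grayscale image on a grid aligned with the radial line at angle phi.

    Assumes the whole grid lies inside the image (no bounds checking).
    """
    cx, cy = center
    ux, uy = math.cos(phi), math.sin(phi)  # unit vector along the radial line
    nx, ny = -uy, ux                       # unit vector across it
    samples = []
    for r in radii:
        for w in offsets:
            x = cx + r * ux + w * nx
            y = cy + r * uy + w * ny
            samples.append(float(image[int(round(y))][int(round(x))]))
    # Zero mean removes the offset b; unit norm removes the positive gain a.
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]
    norm = math.sqrt(sum(v * v for v in centered)) or 1.0  # flat patch -> zeros
    return [v / norm for v in centered]


def match_score(d1: Sequence[float], d2: Sequence[float]) -> float:
    """Normalized cross-correlation of two descriptors, in [-1, 1]."""
    return sum(a * b for a, b in zip(d1, d2))
```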

A unified model for central omnidirectional cameras (with either a mirror or a fisheye lens) and a novel calibration method that uses checkerboard patterns, both implemented in the Omnidirectional Camera Calibration Toolbox for MATLAB.

Visit the OCamCalib Toolbox Website
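
To make the camera model concrete: in the polynomial form described in Scaramuzza's papers, a pixel is first mapped to sensor-plane coordinates (u', v') by removing the image center (x_c, y_c) and a small affine distortion [[c, d], [e, 1]], and is then lifted to the 3D ray (u', v', f(ρ)), where ρ is the distance of (u', v') from the center and f(ρ) = a0 + a1ρ + a2ρ² + … with a1 = 0. A minimal back-projection sketch, assuming exactly that form; the function name and the coefficient values in the usage note are illustrative placeholders, and the toolbox's own code may order axes or store coefficients differently.

```python
import math
from typing import Sequence, Tuple


def pixel_to_ray(u: float, v: float, coeffs: Sequence[float],
                 center: Tuple[float, float],
                 affine: Tuple[float, float, float] = (1.0, 0.0, 0.0)) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) to a unit 3D ray under the assumed polynomial model.

    coeffs = (a0, a1, a2, ...) of f(rho) = a0 + a1*rho + a2*rho**2 + ...
    affine = (c, d, e) of the matrix [[c, d], [e, 1]] (identity by default).
    """
    c, d, e = affine
    du, dv = u - center[0], v - center[1]
    # Invert [u, v]^T = [[c, d], [e, 1]] [u', v']^T + center.
    det = c - d * e
    up = (du - d * dv) / det
    vp = (-e * du + c * dv) / det
    rho = math.hypot(up, vp)
    # Evaluate f(rho) with Horner's scheme.
    z = 0.0
    for a in reversed(coeffs):
        z = z * rho + a
    norm = math.sqrt(up * up + vp * vp + z * z)
    return (up / norm, vp / norm, z / norm)
```

For example, with hypothetical coefficients `(-180.0, 0.0, 1.5e-3, -2.0e-6)` and center `(320.0, 240.0)`, the center pixel maps to the ray `(0, 0, -1)`, since f(0) = a0.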